When more competitors means less harvested resource

Beating your neighbor to the berry patch

Abstract

Recommendation: posted 19 November 2021, validated 22 November 2021

Munoz, F. (2021) When more competitors means less harvested resource. Peer Community in Ecology, 100088. https://doi.org/10.24072/pci.ecology.100088

Recommendation

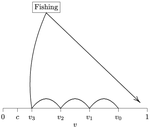

In this paper, Alan R. Rogers (2021) examines the dynamics of foraging strategies for a resource that gains value over time (e.g., ripening fruits), when attempting to forage the resource carries a fixed cost and, once the resource is harvested, nothing is left for other harvesters. In this model, no pure foraging strategy is evolutionarily stable. A mixed equilibrium exists, i.e., a mixture of foraging strategies within the population, but it is still evolutionarily unstable. Nonetheless, Alan R. Rogers shows that for a large number of competitors and/or a high harvesting cost, the mixture of strategies remains close to the mixed equilibrium when the dynamics are simulated. Surprisingly, in a large population individuals will less often attempt to forage the resource and will instead “go fishing”. The paper also reports an experiment in which students played the game, which resulted in a strategy distribution somewhat close to the theoretical mixture of strategies.

The mathematician John F. Nash Jr. (1950) was awarded the Nobel Prize in economics in 1994 for his game-theoretical contributions. He gave his name to the “Nash equilibrium”, a set of individual strategies such that no player has anything to gain by changing strategy while the strategies of the others remain unchanged. Alan R. Rogers shows that the mixed equilibrium in the foraging game is such a Nash equilibrium. Yet it is evolutionarily unstable insofar as a distribution close to the equilibrium can invade.

The insights of the study are twofold. First, it sheds light on the significance of the Nash equilibrium in an ecological context of foraging strategies. Second, it shows that an evolutionarily unstable state can rule the composition of the ecological system. The paper should therefore contribute significantly to a better understanding of the dynamics of competitive communities and their eco-evolutionary trajectories.

References

Nash JF (1950) Equilibrium points in n-person games. Proceedings of the National Academy of Sciences, 36, 48–49. https://doi.org/10.1073/pnas.36.1.48

Rogers AR (2021) Beating your Neighbor to the Berry Patch. bioRxiv, 2020.11.12.380311, ver. 8 peer-reviewed and recommended by Peer Community in Ecology. https://doi.org/10.1101/2020.11.12.380311

The recommender in charge of the evaluation of the article and the reviewers declared that they have no conflict of interest (as defined in the code of conduct of PCI) with the authors or with the content of the article. The authors declared that they comply with the PCI rule of having no financial conflicts of interest in relation to the content of the article.

Evaluation round #3

DOI or URL of the preprint: https://doi.org/10.1101/2020.11.12.380311

Version of the preprint: 6

Author's Reply, 27 Jul 2021

Decision by François Munoz, posted 23 Jul 2021

Dear colleague,

François Massol has provided comments and answers to the points you addressed in your last revision.

I concur that the manuscript deserves a revision in the light of these insightful comments.

We look forward to receiving your answers and revised manuscript.

Best wishes,

François

Reviewed by Francois Massol, 23 Jul 2021

For this third review of A. Rogers' paper, I concentrated on answering his replies to my earlier comments:

Point 2: I think there is a little misunderstanding about theorems and definitions here. The conditions Pi(I,I) = Pi(J,I) and Pi(I,J) > Pi(J,J) are not BC's theorem, but rather the formal definition of an ESS (not necessarily mixed) by Maynard Smith and Price. BC's theorem states, as the author quotes well in the text (after eq. 8), that all pure strategies fare equally well against the mixed ESS. What 3.2 proves is that if one looks for the ESS using BC's theorem, one can find one such mixed ESS and it is (by construction) unique. There is no need to prove that an equilibrium found using BC's theorem is an ESS because it is exactly what BC's theorem proves already. Because all pure strategies fare equally well against the mixed strategy, no change of the "composition" of the mixed strategy can invade and thus the mixed strategy is an ESS.
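For reference, the two statements being distinguished in Point 2 can be written side by side. This is a sketch in the pairwise notation Pi(focal, opponent); the symbol x for a pure strategy and supp(I) for the set of pure strategies played by I are notation introduced here, and the manuscript's multi-player version would replace the opponent by a group such as I^K.

```latex
% ESS definition (Maynard Smith and Price): for every strategy J \neq I,
\Pi(I,I) > \Pi(J,I)
\quad\text{or}\quad
\bigl[\, \Pi(I,I) = \Pi(J,I) \ \text{and}\ \Pi(I,J) > \Pi(J,J) \,\bigr].

% Bishop-Cannings theorem: if I is a mixed ESS, then every pure strategy x
% in the support of I earns the same payoff against I,
\Pi(x, I) = \Pi(I, I) \qquad \text{for all } x \in \operatorname{supp}(I).
```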

Point 3: I still maintain that inequality (14) is not making a step in the right direction, for the reasons given above and below (basically: 3.2 already proves the ESS and the comparison of fitness is not the right one if one wants to look for invasibility).

Point 4: the fact that populations are finite is indeed essential. If you were to remove this assumption, then a population J^1 I^(K-1) would essentially be a population I^K (with K going to infinity), and thus there would be no difference between inequality (14) and Pi(I,I^K) > Pi(J,I^K), which is exactly what is implied in 3.2 (because you used BC's theorem to find the mixed ESS I and following the definition of an ESS).

Overall, I very strongly suggest that the author reconsider his manuscript based on these arguments. I think the key element that is still lacking is the invasibility of other strategies by the mixed ESS, and this is probably the missing piece of the puzzle that might explain why simulations converge or not towards the ESS. At the moment, I cannot be more positive about the revision -- my major criticisms still hold. I have had very little time to consider doing the maths of Pi(I,J^K) - Pi(J,J^K) as I suggested last time, but I would be happy to try it in the future if the author does not feel comfortable doing it.

https://doi.org/10.24072/pci.ecology.100187.rev31

Evaluation round #2

DOI or URL of the preprint: https://doi.org/10.1101/2020.11.12.380311

Version of the preprint: 5

Author's Reply, 24 Jun 2021

Decision by François Munoz, posted 14 Jun 2021

Dear Alan,

Thank you for the revision work you have performed in response to the first round of review.

Two of the original reviewers have read the new version carefully, and some important points on how ESS and NE are defined in the context of the study still require attention.

Therefore, I would be grateful if you could revise your manuscript in order to address the points raised by reviewer 1.

We are certain that the study is valuable, and the new round of revision should make the message even clearer and stronger.

Sincerely,

François

Reviewed by Francois Massol, 29 Apr 2021

For this second reading, I chose to forget everything I wrote last time, just to make sure that no math mistake slipped by me (so I re-computed everything, like last time).

I haven't found "formula issues" (e.g. misnamed integral boundaries or similar typos), but I have some major remarks that I think are going to turn the whole "ESS or not" conundrum upside-down:

1. Starting at section 3.1, the reasoning is presented as a search for NE and/or ESS, but it is not obvious what criteria are being used at each step along the way. Just to make sure we're all on the same page:

* ESS means not invadable by other strategies (their payoffs against the ESS are always worse or equal to the payoff of the ESS against a population of itself);

* NE means a coalition of strategies (pure or mixed) in which no player can do better (have a higher payoff) by just changing its strategy, knowing the strategies of all the other players in advance. An ESS is a particular NE (since it's not invadable by any other strategy, no player will knowingly change its strategy), but particular nonetheless insofar as all players have the same strategy.
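The two definitions above can be checked numerically on a small pairwise game. The sketch below is not the berry-patch model: it uses the textbook Hawk-Dove game with illustrative values V = 2 and C = 4 (an assumption made here), and the function names are hypothetical. It tests the ESS conditions on a grid of mutant strategies.

```python
def expected_payoff(p, q, matrix):
    """Payoff to a player using Hawk with probability p against an
    opponent using Hawk with probability q (matrix rows: focal H, D)."""
    (hh, hd), (dh, dd) = matrix
    return (p * q * hh + p * (1 - q) * hd
            + (1 - p) * q * dh + (1 - p) * (1 - q) * dd)


def is_ess(p_star, matrix, grid=101, tol=1e-9):
    """Check the ESS conditions for p_star against mutants on a grid."""
    resident = expected_payoff(p_star, p_star, matrix)
    for k in range(grid):
        q = k / (grid - 1)
        if abs(q - p_star) < tol:
            continue  # the mutant must differ from the resident
        mutant = expected_payoff(q, p_star, matrix)
        if mutant > resident + tol:
            return False  # mutant does strictly better against the resident
        if abs(mutant - resident) <= tol:
            # Tie against the resident: the resident must beat the
            # mutant in a population of mutants.
            if not (expected_payoff(p_star, q, matrix)
                    > expected_payoff(q, q, matrix) + tol):
                return False
    return True


# Illustrative Hawk-Dove payoffs with V = 2, C = 4 (assumed values).
HAWK_DOVE = ((-1.0, 2.0), (0.0, 1.0))
```

With these values, `is_ess(0.5, HAWK_DOVE)` holds while the pure strategies `is_ess(1.0, HAWK_DOVE)` and `is_ess(0.0, HAWK_DOVE)` fail, and both pure strategies earn the same payoff against the mixed strategy, as BC theorem requires.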

Section 3.1 then makes two points:

* there are no pure ESS (OK for me);

* NE among pure strategies are not interesting because they would require coordination.

Actually, the second point is not exact: in reality, there cannot be any NE consisting only of pure strategies. To realize that, imagine that A players have gone fishing and K + 1 - A have chosen a variety of v values. Obviously, any one of the K+1-A individuals that has not chosen the smallest v could have done better by going fishing. So now imagine that A = K and thus only one player tries to harvest. If it has strategy v>c, then all fishermen can do better by choosing any harvesting strategy between c and v, and the harvester can always do better if v < 1. So there can't be any NE consisting only of pure strategies, except for the very trivial one (everybody but one player going fishing and the last one harvesting at v = c).
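The deviation argument above can be sketched in code. The payoff rules below are assumptions inferred from this review rather than taken from the manuscript: fishing pays c, the harvester with the smallest v receives v (split equally in case of a tie, as in the classroom rule), and any other harvester receives nothing. The names `FISH`, `payoff` and `profitable_deviation` are hypothetical.

```python
from typing import List, Optional, Tuple

FISH = None  # sentinel: the player goes fishing instead of harvesting


def payoff(i: int, profile: List[Optional[float]], c: float) -> float:
    """Payoff to player i under the assumed rules described above."""
    choice = profile[i]
    if choice is FISH:
        return c
    harvest_values = [v for v in profile if v is not FISH]
    lowest = min(harvest_values)
    if choice > lowest:
        return 0.0  # someone harvested earlier; nothing is left
    return choice / harvest_values.count(lowest)  # ties split the resource


def profitable_deviation(profile: List[Optional[float]], c: float,
                         grid: int = 101) -> Optional[Tuple[int, Optional[float]]]:
    """Return the first (player, new_choice) that strictly improves the
    player's payoff, searching fishing and a grid of v values in [0, 1];
    return None if no such deviation is found on the grid."""
    candidates = [FISH] + [k / (grid - 1) for k in range(grid)]
    for i in range(len(profile)):
        current = payoff(i, profile, c)
        for alt in candidates:
            trial = list(profile)
            trial[i] = alt
            if payoff(i, trial, c) > current + 1e-12:
                return i, alt
    return None
```

For instance, with c = 0.2 the profile `[0.3, 0.6, FISH]` leaves the second player with a payoff of 0, so switching to fishing is a profitable deviation; in `[0.5, FISH, FISH]` a fisher can profitably undercut the single harvester with any v between c and 0.5.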

2. In sections 3.2 and 3.3, the mixing up of the two arguments is more pronounced: what is proved in 3.2 is that we can find a mixed strategy that is an ESS, using BC theorem to find it. The strategy defined by eqs (9), (10), (11) and (13) is a mixed ESS, and thus it is also a particular instance of NE (everybody has the same strategy, but just does not play the same due to stochasticity, and no other strategy can invade).
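A sketch of how BC theorem pins down such a mixed strategy, under rules assumed here from the reviews rather than copied from the manuscript: each of the K opponents goes fishing with probability Q or draws a harvest value from a density f on [c, 1]; fishing pays c; harvesting at v pays v only if no opponent harvests earlier. The resulting expressions are illustrative and may differ in form from eqs (9)-(13).

```latex
% s(v): probability that a given opponent does not harvest before v
s(v) = Q + \int_v^1 f(u)\,\mathrm{d}u, \qquad s(c) = 1, \quad s(1) = Q.

% Payoff of the pure strategy v against K opponents playing I
\Pi(v, I^K) = v\, s(v)^K.

% BC theorem: every pure strategy in the support earns the fishing payoff c
v\, s(v)^K = c \quad \text{for all } v \in [c, 1]
\;\Longrightarrow\;
s(v) = \left(\frac{c}{v}\right)^{1/K}, \qquad
Q = s(1) = c^{1/K}, \qquad
f(v) = -s'(v) = \frac{c^{1/K}}{K}\, v^{-1/K - 1}.
```

Under these assumptions, Q < 1/2 is equivalent to 2^K c < 1, the inequality that appears in point 4 below.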

3. The point that section 3.3 is trying to make is not a useful one: resisting invasion in game theory means being an ESS. Since the strategy defined above is an ESS, it does resist invasion by others. The problem with section 3.3 is that it is based on the prologue of appendix A, which tries to compare w_J and w_I based on the probability that I or J encounters groups of I only or with the inclusion of one J. However, this reasoning is flawed:

* imagine that J is rare and say we are looking at the probability that the group of players will count 0, 1 or 2 J players (to make things simple). Let's call these probabilities k_0, k_1 and k_2 (note: these are probabilities of encountering the whole group, not its individual players). Now let's call P_0(I), P_0(J), P_1(I) and P_1(J) the respective probabilities for an I player to compete against K I players, for a J player to compete against K I players, for an I player to compete against 1 J and K-1 I players, and for a J player to compete against 1 J and K-1 I players. Given the k_i's defined before, we obtain:

P_0(I) proportional to k_0

P_0(J) proportional to k_1 (the group needs to have exactly one J player)

P_1(I) proportional to k_1 (you need to find exactly one J player to compete against)

P_1(J) proportional to 2 k_2 (you need to find two J players, one for the focal and one for the unique J opponent, and since players are not labelled there are two combinations of this configuration for each group containing two J players)

As you see, the P_0 of I and J are not the same. And the P_1 of I and J are also completely different... So the whole reasoning presented at the beginning of appendix A is false. But this is not a problem since what it was trying to reproduce in the first place is the ESS reasoning that you have already performed!! (and which proves that I is an ESS)

* Just to convince you completely, there is also the argument of the quantities to compare: Pi(I, J^1 I^(K-1)) vs. Pi(J, J^1 I^(K-1)). If K = 1 (i.e. there are only two players per arena), then the comparison turns out to be Pi(I,J) against Pi(J,J)... How can such a comparison inform us about the invasion of I by J (since Pi(J,J) is about the still non-existent case in which two rare-strategy players would compete against each other)? In fact, this is touching the crucial part: the comparison induced by BC theorem (i.e. Pi(J,I^K) vs Pi(I,I^K)) is the only one that counts when J is rare.

So, to sum up points 1-3:

* the question of Nash equilibria does not seem very interesting in your context because...

* ... you can prove that there are no NE made of pure strategies (my first point) and...

* ... you have proved that there exists a mixed ESS (section 3.2), which is also necessarily a NE

* and thus it is not a mystery that simulations should converge towards the ESS.

4. In the simulations, you mention the existence of "oscillations" when 2^K*c < 1. However, since the points raised by appendix A are now moot, I guess this effect has more to do with the question of invasibility by I: given a mixed strategy J, close to I, can I invade? (see Eshel 1983 on the CSS criterion)

To look at this question, you need to compare Pi(I,J^K) with Pi(J,J^K). I have made the computation myself, using first-order approximations (i.e. Taylor series of "J-I" to the first order) and I find a difference of payoffs exactly equal to 0, which means that the difference is a quadratic form (you need to push to the second order). I have begun the calculation of the second order, but it's more tedious than what I expected... I'd be happy to try to finish this if needed, but since you managed the calculations of appendix A, you're probably more skilled than I am to perform this. Just two quick tips:

- to perturb strategy I (i.e. to quantify how J is different from I), you need two variations, not just one as you did in appendix A: you need the perturbation on Q and the perturbation on the foraging time distribution

- to be able to apply Euler / integration by parts, you need to perturb a function that is "attached" to both boundaries (c and 1). It is not the case for function s because there is no clear attachment of the function at 1 (s(1) = Q, so if you "attach" s(1), you also attach Q). I think the best choice of function to perturb is r = (s-Q)/(1-Q) which goes from 1 to 0 as v goes from c to 1.
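The two tips can be written out as follows. This is only a sketch of the set-up: the small parameter ε, the scalar η and the function ρ are notation introduced here for illustration, and the coefficients D_1 and D_2 are left unevaluated.

```latex
% Rescaled survival function, pinned at both boundaries
r(v) = \frac{s(v) - Q}{1 - Q}, \qquad r(c) = 1, \quad r(1) = 0.

% Two independent perturbations define the nearby strategy J
Q_J = Q + \varepsilon\,\eta, \qquad
r_J(v) = r(v) + \varepsilon\,\rho(v), \qquad \rho(c) = \rho(1) = 0.

% Expansion of the payoff difference in the perturbation size
D(\varepsilon) = \Pi(I, J^K) - \Pi(J, J^K)
= \varepsilon\, D_1 + \varepsilon^2 D_2 + O(\varepsilon^3),
\qquad D_1 = 0 \ \text{(first-order result reported above)}.
```

Because ρ vanishes at both c and 1, boundary terms drop out when integrating by parts, which is what makes r a more convenient function to perturb than s.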

My intuition is that the quadratic difference of payoffs Pi(I,J^K) - Pi(J,J^K) will be a saddle or even a hill (and not a trough) when 2^K*c < 1 (or some similar inequality is met), which might explain why it takes you long to approach the ESS -- because the ESS cannot readily invade nearby strategies, the simulations are stuck circling around it. In case of a saddle, it means strategy I can only invade following one "direction" (Q or the distribution of foraging times); with a hill, the ESS is not approachable at all.
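The trough / saddle / hill distinction can be illustrated on a two-variable quadratic form, standing in for the two perturbation directions (Q and the foraging-time distribution). This is a minimal sketch: the coefficients are placeholders, not values derived from the model, and `classify_quadratic_form` is a hypothetical helper.

```python
import math


def classify_quadratic_form(a: float, b: float, d: float,
                            tol: float = 1e-12) -> str:
    """Classify q(x, y) = a*x**2 + 2*b*x*y + d*y**2 around the origin
    from the eigenvalues of the symmetric matrix [[a, b], [b, d]]."""
    mean = (a + d) / 2.0
    radius = math.hypot((a - d) / 2.0, b)
    low, high = mean - radius, mean + radius  # the two eigenvalues
    if low > tol:
        return "trough"      # q > 0 nearby: I invades every nearby J
    if high < -tol:
        return "hill"        # q < 0 nearby: I invades no nearby J
    if low < -tol and high > tol:
        return "saddle"      # I invades only along some directions
    return "degenerate"      # a zero eigenvalue: higher order needed
```

Here q plays the role of the second-order term of Pi(I,J^K) - Pi(J,J^K), so a positive value means that I does better than J in a population of J.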

N.B.: the fact that strategy I can invade nearby strategies (J close to I) does not say anything about the ability of I to invade ANY strategy. In particular, it is interesting to look at strategy I trying to invade pure strategies, or a mix of pure strategies (but then it means a situation that is out of equilibrium). This would be a bit easier (because pure strategies have payoffs that do not involve integrals), but I haven't performed the calculations.

Again, my apologies for not catching these points in the earlier version of the paper. I still think it's a worthwhile study, but it will be better with these points corrected.

https://doi.org/10.24072/pci.ecology.100187.rev21

Reviewed by Jeremy Van Cleve, 21 May 2021

Evaluation round #1

DOI or URL of the preprint: https://doi.org/10.1101/2020.11.12.380311

Version of the preprint: 4

Author's Reply, 12 Apr 2021

Decision by François Munoz, posted 01 Mar 2021

The ms of Rogers presents a game theoretical model addressing the evolutionary dynamics of foraging behaviour when a resource becomes increasingly rewarding as it ripens (parameter v varying between 0 and V when the resource ripens) while an alternative resource exists but is less valuable ("go fishing", parameter c).

3 reviewers have carefully examined the model, and provided insightful comments and suggestions on the study.

They and I concur that the study should be interesting for a broad audience of evolutionary biologists and ecologists reading PCI Ecology.

Please consider the following major points in a revised version of the ms:

- The 3 reviewers noted that the reference to Maynard Smith is not the most suitable and/or can be somewhat misleading.

They suggest referring to the Bishop-Cannings theorems. In addition, stating that foraging ecology has little to say about game theory would be an exaggeration. Some references are proposed for the latter point.

- Another important point is whether and how the maths, the simulations and the lab experiments together support the main point of the paper on the evolutionary trajectory to a mixed strategy.

The apparent inconsistency between the analytical expectation of non-stability and the observed "approachability" in simulations needs further explanation and discussion.

Regarding the lab experiment, I share the concern that the model assumptions and the specific experimental conditions should be cautiously discussed. It would be misleading to suggest that the experiment demonstrates or even illustrates the theoretical results without discussing the limitations of such a comparison.

- The point by reviewer 1 that the model could be analyzed in a context of Adaptive Dynamics also needs discussion.

Please carefully address these points and the other relevant points raised in the reviews.

We look forward to receiving a revised version of this very interesting work for further consideration at PCI Ecology.

Reviewed by anonymous reviewer 1, 25 Jan 2021

The ms by Rogers reports an evolutionary game study of foraging behavior. The author uses different approaches: a classroom experiment and a theoretical model. The theoretical model is analyzed using both analytical criteria for strategy invasion and simulations. The ms is motivated by the behavior of foragers, emphasizing that foraging is fundamentally a game, i.e. the payoff of a player with a given strategy depends on the strategies of others. This is indeed a relevant approach. The ms is interesting in that it attempts to address an ecological question in a conceptual way. It is however not exactly true to write that foraging ecology has little to say about game theory. Maynard Smith models (e.g. Hawk-Dove) can be interpreted as foraging models. Also, Bulmer (Theoretical Evolutionary Ecology, p168) discusses and analyzes a foraging model.

Beyond this, I was a bit puzzled by the different approaches, which are not completely consistent. First, there is a mix of “intuitive” mathematical arguments and analytical treatments of the question. I have to admit that I may have missed some arguments in the math. What strikes me is that the mixed strategy was not found to be evolutionarily stable in the analytical treatment but was found to be “approachable” in the simulations. To me, this is an important caveat in the ms and questions the role of the analytical treatment. Is the mathematical treatment relevant with regard to biology? If not, what is the key mathematical assumption that fails to describe the biological phenomenon? This is clearly not discussed enough in the ms. Could the size of mutations in the analytical treatment and in the simulations cause this discrepancy?

Second, it is always interesting to confront experiment and theory, but to me it seems premature to interpret the classroom experiment in the light of the model. I also found it risky to interpret ecological or ethnographical examples in the light of a model whose dynamics are not totally understood. From a historical perspective, the Hawk-Dove model is a sort of metaphor that can help to interpret empirical patterns. In the present case, the ms oscillates between a sort of mechanistic model (close to ecological mechanisms) and a heuristic model.

https://doi.org/10.24072/pci.ecology.100187.rev11

Reviewed by Francois Massol, 06 Jan 2021

Summary

This paper presents a game theoretic model of a kind of contest competition for ripening resources. The author develops a simple, mathematically tractable version of the model and also presents results of a classroom experiment and evolutionary simulations. Overall, theoretical and empirical results seem to generally agree, with the surprising result that more and more contestants “go fishing” when the number of competitors increases. The Nash equilibrium, which is evolutionarily unstable, looks like a good approximation for the evolutionary outcome, for reasons that are not totally clear yet.

Evaluation of the manuscript

My general appreciation is that this paper is well-written, quite clear about its objectives, results and interpretations, and detailed enough to be reproducible / replicated. That being written, I have some remarks aimed at helping the author improve the manuscript. Some of them are really suggestions of tweaks that could possibly make the model more realistic or less prone to paradoxical results. I’m well aware we all have only 24 hours in a day, so please do take these suggestions as such, not as strict requirements for your study to be considered as valid.

I have to disclose that I did not dig a lot in the literature on game theory to check whether a similar model had already been described somewhere or not – from memory, I have not read any study on a model similar to this one, but my expertise might be too limited to judge the novelty of the study. I checked all the equations of the paper and re-did all analytical calculations, and I did not find any mistake.

My first major remark concerns the theoretical background against which some of the big results are framed. The book of Maynard Smith is cited at various locations in the ms to justify the payoff equality between pure strategies at the NE (or potential ESS). However, this result is classically known as one of the Bishop-Cannings theorems (Bishop and Cannings, 1976). Citing it might be slightly more appropriate. Also, in the same vein, section 3.3 and appendix B could make use of the term “evolutionarily stable coalition” instead of the vague “evolutionary equilibrium”. The use of “coalition” in this context was probably first introduced in the literature by Brown & Vincent (1987), to the best of my knowledge. Other useful references from the 80’s theoretical biology literature could also be read on this topic (Thomas, 1984; Hines, 1987).

For the reader more knowledgeable about evolutionary ecology models than game theory fundamentals, it looks like the model could be amenable to an adaptive dynamics (AD) analysis (Hofbauer and Sigmund, 1990), i.e. considering v, or some characteristics of the distribution of v (i.e. its expectation, variance, etc.), as an inheritable trait and looking at the selection gradient for this trait, considering payoffs as a proxy for evolutionary fitness (i.e. the number of offspring sired given the strategy of the parent). The analysis of singular strategies (sensu Adaptive Dynamics) would lead to the discovery of the same Nash equilibrium I think, but the second-order analyses (checking for convergence stability and evolutionary stability through the second derivatives of the invasion fitness function) might help understand what happens next, i.e. the switch from the NE to a coalition of strategies. Using mutational processes closer to those classically used in AD (instead of the ones described on page 8) might also help. A classic primer on this is the paper by Geritz et al. (1998). The evolution of infinitely dimensional traits, such as distributions, has been considered in this context (Gomulkiewicz and Beder, 1996). I have not tried the AD approach on this particular model, but prior work I did with some colleagues on a similar problem, i.e. the evolution of exploration time in the context of imperfect information about the quality of resource patches when good-quality patches are limited (Schmidt et al., 2015), suggests it can be done. Take this as a suggestion for potentially elucidating what happens in coalitions, not a strict requirement for this study.
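The second-order checks mentioned above can be sketched numerically for a scalar trait. The invasion fitness below is a toy quadratic chosen for illustration, not the berry-patch model, and the function names and parameters (a, b, x0) are hypothetical. The criteria follow the usual Adaptive Dynamics conventions (Geritz et al., 1998): a singular strategy is evolutionarily stable if the second derivative with respect to the mutant trait is negative, and convergence stable if the second derivative with respect to the resident trait exceeds the one with respect to the mutant trait.

```python
from typing import Callable, Dict, Tuple

Fitness = Callable[[float, float], float]  # s(mutant y, resident x)


def second_derivatives(s: Fitness, x_star: float,
                       h: float = 1e-3) -> Tuple[float, float]:
    """Central finite differences of s at y = x = x_star, with respect
    to the mutant trait and to the resident trait."""
    s0 = s(x_star, x_star)
    d2_mutant = (s(x_star + h, x_star) - 2 * s0 + s(x_star - h, x_star)) / h**2
    d2_resident = (s(x_star, x_star + h) - 2 * s0 + s(x_star, x_star - h)) / h**2
    return d2_mutant, d2_resident


def classify_singular_strategy(s: Fitness, x_star: float,
                               h: float = 1e-3) -> Dict[str, bool]:
    """Apply the two second-order criteria at a singular strategy."""
    d2_mutant, d2_resident = second_derivatives(s, x_star, h)
    return {
        "evolutionarily_stable": d2_mutant < 0,
        "convergence_stable": d2_resident > d2_mutant,
    }


def toy_invasion_fitness(a: float, b: float, x0: float) -> Fitness:
    """Toy fitness with s(x, x) = 0 and a singular strategy at x0:
    the selection gradient is b * (x0 - x), the mutant curvature 2 * a."""
    def s(y: float, x: float) -> float:
        return b * (y - x) * (x0 - x) + a * (y - x) ** 2
    return s
```

With a = 1 and b = 2 the singular strategy is convergence stable but not evolutionarily stable (a branching point in AD terms); with a = -1 and b = 2 it is both.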

To understand what happens in the classroom experiment, would it be possible to invoke risk aversion and the necessity, in real-life situations, for the game to be repeated? If long-term fitness depends on the geometric average of payoffs gained through such games, it might be advantageous to limit losses, more so than trying to obtain the best average gains. Or conversely, one might consider that v’s less than c could emerge out of spite (if e.g. a player had been beaten in an earlier game)? More generally, the presentation of the mathematical model begins with astute considerations regarding the application of a one-shot game; it would be quite useful to get such considerations back on the table when discussing the interpretation of the discrepancies between classroom experiments and the analytical model. Repeated games, as well as more realistic situations involving relatedness among competitors (with consequently closer than expected strategies), might affect the evolutionary outcome.
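The geometric-average argument can be made concrete with a two-line comparison. The payoff sequences below are invented for illustration (they are not data from the classroom experiment), and payoffs are assumed strictly positive so that the geometric mean is defined.

```python
import math
from typing import Sequence


def arithmetic_mean(payoffs: Sequence[float]) -> float:
    """Average payoff per game."""
    return sum(payoffs) / len(payoffs)


def geometric_mean(payoffs: Sequence[float]) -> float:
    """Per-game growth factor when payoffs multiply across repeated games
    (requires strictly positive payoffs)."""
    return math.exp(sum(math.log(p) for p in payoffs) / len(payoffs))


# Hypothetical payoff sequences: a risky strategy that alternates
# between a large win and a near-total loss, and a safe constant one.
risky = [1.0, 0.1]
safe = [0.5, 0.5]
```

The risky sequence has the higher arithmetic mean, but the safe one has the higher geometric mean, which is the sense in which limiting losses can be favoured over maximizing average gains.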

Maybe at some point it might be interesting to point out assumptions that are ecologically questionable (since PCI Ecology is about ecology in the first place). I’m thinking e.g. of the fact that choosing v might be less simple than choosing the time to harvest the resource; if the two quantities are not 100% linearly correlated, this can create enough stochasticity to select for more prudent strategies. The cost of acquiring information might also come into play (at least in the form of a non-constant opportunity cost “the longer I wait, the more time I could have spent fishing”). Finally, ecologically realistic situations probably involve the existence of multiple berry patches and a handling time for picking berries, which might limit the opportunities for too strong an inequality in payoffs among competitors after one season. Overall, all of these assumptions question some of the applicability of the present model. All models are wrong, so this does not bother me that much, but at least it is better to present all of these points and go through them and their potential consequences for the study results, rather than ignore them altogether.

In the same vein as my previous point, I was wondering whether it could be possible to look at what happens when ties are not exact. In other words, the experimental part of the study states that ties divide the gain equally between all competitors that chose the right time for harvest; in the analytical part, this rule is not useful because time is considered continuous and players are assumed to play mixed strategies, but what would happen if sufficiently close decision times occurred? (i.e. if A acts at time t and B acts at time t + epsilon, how should the bounty be split, assuming the time to pick all the berries is larger than epsilon?) Would this additional rule solve the paradox of the non-evolutionarily stable NE? Again, this is more like a suggestion, but some bizarre model results sometimes do not resist the addition of more realistic assumptions (here, I’m thinking that there is a “tie rule” for the classroom experiment, but none in the actual analytical model – if ties clearly have a psychological effect on players, this might explain the excess of very short times in the classroom).

Technical remarks:

Equations (9-13) are not commented, or barely. It could be useful e.g. to assess whether Q increases with K, c, etc. And the same thing for v_bar, Pi, … This absence of comment is especially acute when one arrives at the top of page 11, where comments on functions are at last given – the reader is then left wondering whether the same exercise would have been possible earlier, i.e. on equations (9-13) rather than equations (16-19).
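As an illustration of the kind of comment that could accompany these equations, the sketch below tabulates how the probability of going fishing, Q, responds to K and c. It relies on an assumption made here, not on the manuscript's equations: if fishing pays c and a harvester at v earns v only when none of the K opponents harvests earlier, payoff equality at v = 1 gives Q^K = c, i.e. Q = c^(1/K). The function name is hypothetical.

```python
def fishing_probability(c: float, K: int) -> float:
    """Equilibrium probability of going fishing under the assumed
    indifference condition Q**K = c (an assumption, see lead-in)."""
    if not 0.0 < c < 1.0:
        raise ValueError("c must lie strictly between 0 and 1")
    if K < 1:
        raise ValueError("K must be a positive integer")
    return c ** (1.0 / K)


# Tabulate Q over a few illustrative parameter values.
table = {(c, K): fishing_probability(c, K)
         for c in (0.05, 0.2, 0.6) for K in (1, 2, 3, 5)}
```

Under this assumption Q increases with both K and c, in line with the result that more competitors "go fishing" as their number grows, and Q < 1/2 coincides with 2^K*c < 1.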

You should give a more substantial legend to Table 1, probably incorporating all of footnote 1 to explain what D means.

In Figure 6, the legend is not self-sufficient (the values of K, c, are missing). Also the x-axis scale (between 0 and 10) is weird at this point in the text. One has to get back to the way the classroom experiment is written to remember why there is this difference…

The use of “unstable” instead of “evolutionarily unstable” is a bit problematic sometimes because the two forms of stability (classic dynamical system stability and evolutionary stability) can be studied together in models like this one.

In the integrals of equation (22), v is both the upper bound of integration and the variable to integrate over. You should use two different symbols.

The reasoning between inequality (23) and Q > ½ is not obvious. It involves a little bit of analysis. Since this is an appendix, you might as well write it down (briefly).

The transition just after equations (24-25) reads “adding and subtracting c”, but in reality you immediately recover these equations by using Q = 1 – the integral of f and distributing the integrals.

On pages 15-16, showing that D is maximum for J = I is actually considerably stronger than proving that it is not an ESS – it means that all other mixed strategies can invade! Maybe this should be discussed a little.

The “Euler equation” is strange. Given the writing of Z as a function of s of v, I would use d/du rather than d/dx for the derivative with respect to the integrated variable. And you can also find this result using the same integration by parts trick used later to find the second derivative of D. You might as well make this computation explicit.

Condition (27) is also true for a NE, so if the starting point of appendix B is a NE, you don’t have to prove that condition (27) is true, do you? But I don’t find your justification at the top of page 18 very convincing – to me this does not answer what happens when mixed strategies J try to invade, i.e. whether Pi(J,I^k) are equal to Pi(I,I^k). Using equations (31-33), however, helps you to prove it (you simply have to compose the Pi score based on those of all pure strategies).

I’d say that the factorisations you propose (equation [29] and the ensuing equations [31-33]) are OK if the random variable X yielding the actual pure strategy chosen by any player is not correlated to any other “environmental” random variable, i.e. if players do not synchronize their strategies through some external signal. Otherwise, you would have to condition B^K by I playing x in equation (31), i.e. the choice of the pure strategy chosen by the focal should partly determine the population of pure strategies it will play against. There might be some formal way of expressing this condition using correlations / independence of the various random variables.

Typos:

Line 3 on page 8: “in a homogeneous population” (no S at population)

Penultimate line of page 14: “if anD only if”

Literature cited

Bishop, D. T. & Cannings, C. (1976) Models of animal conflict. Advances in Applied Probability, 8, 616-621.

Brown, J. S. & Vincent, T. L. (1987) Coevolution as an evolutionary game. Evolution, 41, 66-79.

Geritz, S. A. H., Kisdi, E., Meszena, G. & Metz, J. A. J. (1998) Evolutionarily singular strategies and the adaptive growth and branching of the evolutionary tree. Evolutionary Ecology, 12, 35-57.

Gomulkiewicz, R. & Beder, J. H. (1996) The selection gradient of an infinite-dimensional trait. SIAM Journal on Applied Mathematics, 56, 509-523.

Hines, W. G. S. (1987) Evolutionary stable strategies: a review of basic theory. Theoretical Population Biology, 31, 195-272.

Hofbauer, J. & Sigmund, K. (1990) Adaptive dynamics and evolutionary stability. Applied Mathematics Letters, 3, 75-79.

Schmidt, K. A., Johansson, J., Kristensen, N., Massol, F. & Jonzén, N. (2015) Consequences of information use in breeding habitat selection on the evolution of settlement time. Oikos, 124, 69-80.

Thomas, B. (1984) Evolutionary stability: states and strategies. Theoretical Population Biology, 26, 49-67.

https://doi.org/10.24072/pci.ecology.100187.rev12