A handy “How to” review code for ecologists and evolutionary biologists

based on reviews by Serena Caplins and 1 anonymous reviewer

based on reviews by Serena Caplins and 1 anonymous reviewer

Implementing Code Review in the Scientific Workflow: Insights from Ecology and Evolutionary Biology

Abstract

Recommendation: posted 10 August 2023, validated 11 August 2023

Logan, C. (2023) A handy “How to” review code for ecologists and evolutionary biologists. Peer Community in Ecology, 100541. https://doi.org/10.24072/pci.ecology.100541

Recommendation

Ivimey Cook et al. (2023) provide a concise and useful “How to” review code for researchers in the fields of ecology and evolutionary biology, where the systematic review of code is not yet standard practice during the peer review of articles. Consequently, this article is full of tips for authors on how to make their code easier to review. This handy article applies not only to ecology and evolutionary biology, but to many fields that are learning how to make code more reproducible and shareable. Taking this step toward transparency is key to improving research rigor (Brito et al. 2020) and is a necessary step in helping make research trustable by the public (Rosman et al. 2022).

References

Brito, J. J., Li, J., Moore, J. H., Greene, C. S., Nogoy, N. A., Garmire, L. X., & Mangul, S. (2020). Recommendations to enhance rigor and reproducibility in biomedical research. GigaScience, 9(6), giaa056. https://doi.org/10.1093/gigascience/giaa056

Ivimey-Cook, E. R., Pick, J. L., Bairos-Novak, K., Culina, A., Gould, E., Grainger, M., Marshall, B., Moreau, D., Paquet, M., Royauté, R., Sanchez-Tojar, A., Silva, I., Windecker, S. (2023). Implementing Code Review in the Scientific Workflow: Insights from Ecology and Evolutionary Biology. EcoEvoRxiv, ver 5 peer-reviewed and recommended by Peer Community In Ecology. https://doi.org/10.32942/X2CG64

Rosman, T., Bosnjak, M., Silber, H., Koßmann, J., & Heycke, T. (2022). Open science and public trust in science: Results from two studies. Public Understanding of Science, 31(8), 1046-1062. https://doi.org/10.1177/09636625221100686

The recommender in charge of the evaluation of the article and the reviewers declared that they have no conflict of interest (as defined in the code of conduct of PCI) with the authors or with the content of the article. The authors declared that they comply with the PCI rule of having no financial conflicts of interest in relation to the content of the article.

This work was partially funded by the Center of Advanced Systems Understanding (CASUS), which is financed by Germany's Federal Ministry of Education and Research (BMBF) and by the Saxon Ministry for Science, Culture and Tourism (SMWK) with tax funds on the basis of the budget approved by the Saxon State Parliament. C.H.F. and J.M.C. were supported by NSF IIBR 1915347.

Evaluation round #1

DOI or URL of the preprint: https://doi.org/10.32942/X2CG64

Version of the preprint: 4

Author's Reply, 08 Aug 2023

Decision by Corina Logan , posted 24 Jul 2023, validated 24 Jul 2023

, posted 24 Jul 2023, validated 24 Jul 2023

Thank you for your wonderful “How to” article! It is a useful and concise read that should be helpful for many researchers. Two reviewers who have expertise in code sharing and/or promoting open research practices have provided very positive feedback and some helpful ideas that you might find useful to incorporate.

If you decide to incorporate the addition of co-authorship for code reviewers, as suggested by Reviewer 1, please also reference a guideline for authorship to ensure that researchers are aware of what the code reviewers would need to do to fully earn authorship. For example, according to the ICMJE guidelines (http://www.icmje.org/recommendations/browse/roles-and-responsibilities/defining-the-role-of-authors-and-contributors.html#two), authors need to have contributed to the development of the article AND the writing of the article. Therefore, a code reviewer could earn authorship if they review the code (contributing to the development of the article) AND they help with the editing of the article.

If you decide to incorporate the discussion around offering reviewers co-authorship, as suggested by Reviewer 1, please also provide ideas for how peer review processes can address the issue of it being very difficult to find enough reviewers in the first place. If the few people who accept reviews were to become co-authors because of their code reviewing work as part of the review process, then new reviewers would need to be recruited to be the reviewers of the article (because authors cannot review their own articles).

I have only a few minor comments:

- Line 109: perhaps change “and mistaking the column order” to “and producing a mistaken column order”

- Line 113: by “number” in “These errors are thought to scale with the number and complexity of code”, do you mean the number of lines? Or the number of code chunks? Or something else?

- Line 116: wow, I had no idea about identical() - what a useful tool!

- Figure 2: it’s nice that you suggest contacting the authors directly. This can save so much time in the peer review process and promotes collegial interactions

- Line 183: for some reason the URL https://github.com/pditommaso/awesome-pipeline is not working - the pdf seems to be cutting it off, which results in a 404 error

- Line 209: is Dryad free? I thought it cost money for authors to use it (which might be hidden by contracts Dryad has with publishers or universities)

- Figure 3: “Can my code be understood?” perhaps change to “Is my code understandable?”. I’m not sure what a style guide is - maybe it is in the resources you suggested for cleaning up code? Regardless, make it a bit more obvious what this piece is

- Line 276: this link is broken https://github.com/SORTEE/peer-277 code-review/issues/8

- Line 295: “not to get bogged down modifying or homogenising style” I would add “by” as in “bogged down by modifying”

- Line 338: “These benefits are substantial and could ultimately contribute to the adoption of code review during the publication process.” Adoption by whom? Journals?

A couple of things I’ve learned from my own open workflows that you might find useful for the article (of course, don’t feel pressured to include these just because I mentioned them):

1) THE easiest way I find to make my code runable by anyone anywhere is when I upload the data sheet to GitHub and reference it in the R code so it will easily run from anyone’s computer (see an example of the code here: https://github.com/corinalogan/grackles/blob/6c8930fcd66105b580809ef761d63b9cff0cbd83/Files/Preregistrations/g_flexmanip.Rmd#L233)

2) Line 209: consider adding the following data repository to your list: Knowledge Network for Biocomplexity (https://knb.ecoinformatics.org/). It is free, University-owned, and for ecology data, as well as being easily searchable because their metadata requirements are extensive (thus removing the need for researchers to remember all of the metadata they should be adding).

I look forward to reading the revision.

All my best,

Corina

Reviewed by anonymous reviewer 1, 11 Jul 2023

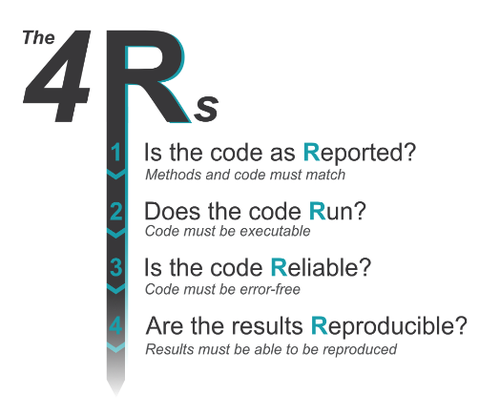

This commentary highlighted the lack of reproducibility as a long-lasting, systematical issue in ecology and evolutionary biology, where results heavily depend on statistical modeling and numerical simulations. The authors suggested a comprehensive guideline to include code review in the pre-submission, peer review, and post-publication process to ensure the validity and robustness of scientific conclusions. Together with other pioneers who advocate for reproducible research in psychology and computer science, the authors proposed a valuable and practical framework (i.e., 4Rs, flowchart for peer reviewers).

As an advocate for reproducible research myself, I agree entirely with the necessity and urgency to change the status quo in the publication process, which emphasizes and incentivizes too much on the scientific novelties, but comparably too little on the reproducibility. Despite being out of the scope of this paper, in an ideal world, scientific discoveries should be published only after stringent fact-checking and careful examination of the method. Before sweeping through the entire field of biology, the discipline of ecology and evolution and other computationally extensive disciplines could be a pioneering test ground for incorporating code review as a standard publication practice.

In light of the well-versed rationales and the recommendations in this paper and my alignment with the preference for reproducible research, I do not have any major "concerns" overall. Before going to minor technical comments, I would like to share some general thoughts that may interest the authors to discuss or further develop in the revised manuscript.

1. In the section Are results reproducible?

Although each R in the 4R guidelines is indispensable, the intrinsic demands are increasing from the first to the last R. A fair and feasible implementation of these principles could vary by discipline/subdisciplines. Reproducible results have, of course, the uttermost importance and should be ensured whenever possible. Yet, in some cases, fully reproducing results is practical.

For example, evolutionary biology has relied on and/or gradually will rely more on insights obtained from high-throughput sequencing (comparative genomics, transcriptomics, and other omics). Due to the nature of its high-volume, sometimes high-dimensionality, computations involved are expansive not only in terms of computational time but also involves in the accessibility and availability of resources, including High-Performance Cluster (HPC), # of CPUs, memories, and storage. If reviewers were required to reproduce results in the peer review process, installation and configuration of a substantial fraction of bioinformatic software are not trivial, even if resources are provided/subsidized for the reviewer.

One possible solution to this (which may be outside the scope of this paper) is to watermark (e.g., using MD5 sum) all intermediate results during the computation and only examine the reproducibility of the "scaled-down" version of results.

2. In the section Is the code Reliable

Besides the reasons mentioned by the authors, many errors in code could often appear in user-defined analysis or helper functions. Toy examples, if not unit tests in the engineering fields, should at least be included to test if those functions are doing what they are expected to do.

3. On incentives for code-review club and reviewers

As most researchers are scientists (some may be statisticians) by training, requiring them to know and implement the best practices in coding could be too demanding, especially for the first author, who happens to be a bench scientist working on a highly independent project. Besides recommendations proposed by this paper (e.g., code review club, discussing up front potential authorship for code reviewers), I would go beyond and argue that code reviewers are entitled to authorship, especially if they contributed significantly in making code reviewable (Fig. 3)

On the other hand, given the current unfair workload that reviewers commonly face, reviewers should be offered the authorship opportunity as one of the incentives, especially if fact-checking is time-consuming. Although (again) may be out of the scope of this paper, this could be facilitated by journals that adopt a double-blind reviewing process. Authors' consent on granting anonymous reviewers should be asked before the manuscript is sent out to external reviewers, and editors must withhold the information from reviewers until the final acceptance decision on the manuscript to ensure the objectivity of reviewing process. To mitigate the possibility that authors are exploiting the reviewer's fact-checking work, authors should also agree that the reviewer will be entitled to authorships if they (1) find major discrepancies in the code that change the direction of the corresponding conclusions, (2) provides the evidence of their workflow (3) authors subsequently can reproduce the issues that reviewers postulated.

As the authors pointed out, designated "Data Editors" have become standard practices in some journals. Designated "Data Reviewers" may be on the horizon in the future. In such cases, authorship to data reviewers as incentives could be discussed, and it might be favorable not to grant authorship to data reviewers if they do not assess the manuscript's scientific novelty.

Minor comments:

4. Consider switching the orders of Fig.2 and Fig. 3 and updating the in-text reference

5. Line 183-184 https://github.com/pditommaso/awesome-184pipeline not found

6. Line 276-277 https://github.com/SORTEE/peer-277code-review/issues/8 not found

7. Line 352: add in-text reference "(Fig. 1)" after if code is indeed adhering to the R’s listed above.

8. Line 358: missing full citation of Stodden 2011, Light et al., 2014

9. Fig 1: "Code must be error-free": the language is vague, and I suggest revising it to "Is the code doing what it is supposed to do?"

Reviewed by Serena Caplins, 07 Jul 2023

The manuscript describes a process by which code-review in the field of ecology and evolution could take place. The authors suggest following the 4 R's (Reported, Run, Reliable, Reproducible).

Throughout the paper the authors provide some suggestions for reproducibility and effective managment of package versions. A tool not yet mentioned that my be useful is the R package "packrat" which stores packages specific to the version as they are installed, can reload them across different machines and can be shared across users. I recommend adding this type of package to the manuscript as this (version control/reproducibility) is what it was built for. It would be nice to see a packrat snapshot submitted along with code to fully reproduce an analyses.

Another tool which can greatly aid in reproduciblity is containers and/or the containerization of a research project. Here is a paper describing their use in data science https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1008316

And more information on the reproducibility of containers here: https://carpentries-incubator.github.io/docker-introduction/reproduciblity/index.html

Beyond that I found the paper to be well written and well structured and feel it will be a helpful guide for the community. Congratulations to the authors and thank you for putting this together!

I would love to see journals take code review serieously and hire someone to code review alongside the work performed by the editors and reviewers. Perhaps some of the society journals should take the lead here?