based on reviews by Maxime Dahirel and 1 anonymous reviewer

based on reviews by Maxime Dahirel and 1 anonymous reviewer

Behavioral flexibility, i.e. the “ability to adapt behavior to new circumstances through packaging information and making it available to other cognitive processes” (Logan et al. 2023), appears as one of the crucial elements of responses of animal species to changing environments. Behavioral flexibility can change within the life of individuals, depending on their experience on the degree of variability and predictability of their surrounding environment. But little is known on the cognitive processes involved in these temporal changes in behavioral flexibility within individuals.

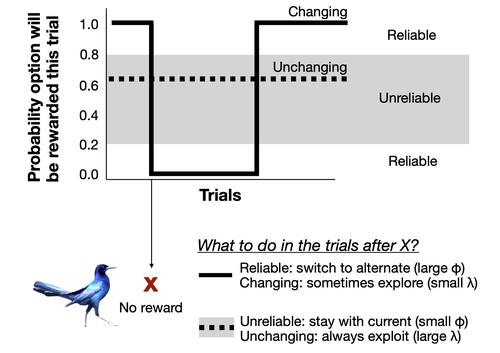

This is what Lukas et al. (2024) investigated very thoroughly, using the framework of serial reversal learning experiments on great-tailed grackles to study different aspects of the question. Behavioral flexibility as involved in serial reversal learning experiments was previously modeled as being made of two primary parameters: the rate of updating associations, phi (i.e. how fast individuals learn the associations between a cue and its associated reward or danger); and the sensitivity to the learned associations, lambda (i.e. how strong do individuals make their choices based on the associations they learned).

Lukas et al. (2024)* used a Bayesian reinforcement model to infer phi and lambda in individuals going through serial reversal learning experiments, to understand which of these two parameters explains most of the variation in grackle performance in serial reversal learning, how correlated they are, how they can change along time depending on an individual’s experience, how variable they can be among individuals, and whether they can predict performance in other contexts. But beforehand, the authors used an individual-based model to assess the ability of the Bayesian reinforcement model to correctly assess phi and lambda in their experimental design. They also used the Bayesian model to infer the range of values of phi and lambda an individual needs to exhibit to reduce errors in the serial reversal learning experiment.

Among other results, this study shows that in a context of rapidly changing but strongly reliable cues, the variation in the success of grackles is more associated with the rate of updating associations (phi) than the sensitivity to learned associations (lambda). Besides, phi increased within individuals along the serial reversal learning experiment, while lambda only slightly decreased. However, it is very interesting to note that different approaches could be adopted by different individuals through the training, leading them eventually to the same final performance: slightly different combinations of changes in lambda and phi lead to different behaviours but compensate each other in the end in the final success rate.

This study provides exciting insights into the cognitive processes involved in how changes in behavioral flexibility of individuals can happen in this type of serial learning experiments. But it also offers interesting openings to understand the mechanisms by which behavioral flexibility can change in the wild, helping individuals to cope with rapidly changing environments.

* Lukas et al. (2024) presents a post-study of the preregistered study Logan et al. (2019) that was peer-reviewed and received an In Principle Recommendation for PCI Ecology (Coulon 2019; the initial preregistration was split into 3 post-studies). A pre-registered study is a study in which context, aims, hypotheses and methodologies have been written down as an empirical paper, peer-reviewed and pre-accepted before research is undertaken. Pre-registrations are intended to reduce publication bias and reporting bias.

References

Coulon, A. (2019) Can context changes improve behavioral flexibility? Towards a better understanding of species adaptability to environmental changes. Peer Community in Ecology, 100019. https://doi.org/10.24072/pci.ecology.100019

Logan, CJ, Lukas D, Bergeron L, Folsom M, McCune, K. (2019). Is behavioral flexibility related to foraging and social behavior in a rapidly expanding species? In Principle Acceptance by PCI Ecology of the Version on 6 Aug 2019. http://corinalogan.com/Preregistrations/g_flexmanip.html

Dieter Lukas, Kelsey B. McCune, Aaron P. Blaisdell, Zoe Johnson-Ulrich, Maggie MacPherson, Benjamin M. Seitz, Augustus Sevchik, Corina J. Logan (2024) Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments. ecoevoRxiv, ver.4 peer-reviewed and recommended by Peer Community in Ecology https://doi.org/10.32942/osf.io/4ycps

DOI or URL of the preprint: https://doi.org/10.32942/osf.io/4ycps

Version of the preprint: 3

Dear Aurélie Coulon, Maxime Dahirel, and anonymous reviewer,

Thank you for the continued constructive feedback on our revised manuscript! We addressed the requested edits, and reply to the comments below (in bold). We agree that this process improved our manuscript!

In addition to the version with tracked changes that we uploaded at the PCI Ecology website, here are links to alternative versions of the article, depending on which you prefer:

-PDF at EcoEvoRxiv (version 4): https://ecoevorxiv.org/repository/view/3689/

-rmd file with the text and the code (version-tracked): https://github.com/corinalogan/grackles/blob/master/Files/Preregistrations/g_flexmanip2post.Rmd

Thank you so much for all of your help throughout this whole research process!

All our best,

Dieter Lukas (on behalf of all co-authors)

Revision round #3

Decision for round #3 : Revision needed

Minor revision needed

Dear Dr Lukas and collaborators,

The two reviewers who evaluated the previous version of your ms have sent comments on the revised version of your preprint entitled “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments”.

Both of them are happy with the way you took their previous comments into account and your ms has now substantially improved. They made a number of minor suggestions that I recommend you take into account so that your ms is fully ready for a recommendation by PCI Ecology.

Best,

Aurélie Coulon,

Recommender, PCI Ecology.

by Aurélie Coulon, 17 Jul 2024 13:58

Manuscript: https://doi.org/10.32942/osf.io/4ycps

version: 3

Review by Maxime Dahirel, 10 Jul 2024 11:14

I have read the newly-revised version of the manuscript “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments” by Lukas et al.

I mentioned in my previous review that I did not have any meaningful comments left on the substance, so my remaining comments were on the form. The authors have taken my previous comments into account, resulting in a much clearer manuscript on which I have not much to say. There are still a few points left where they slipped in their formatting, and I have tried to point them all. In a couple cases, the revision effort may have added new points needed clarification; I have tried to signal them all too.

Please see my comments below:

(1) Line 28: “in this experiment” is ambiguous, since the experiment in question is not mentioned before in the Abstract

Reply 1.1: We replaced this with “during serial reversal learning experiments” to clarify what the approach refers to.

(2) In the Introduction, I feel like the paragraphs starting line 75 and line 95 may work better if switched?

Reply 1.2: We did not switch the paragraphs because we think it is helpful to first have a description of what serial reversal learning experiments are (lines 77ff) before going into the details of which processes individuals might use during these experiments. To avoid repetition, we already provide the specific description of the experiments we used here, rather than first providing a generic description followed by a more specific explanation of the setup used here later.

(3) Lines 83-87: are these sentences really necessary in an Introduction?

Reply 1.3: As mentioned above, we think that it is helpful to provide a detailed description of what the birds experienced because this informs the considerations about the processes that individuals might use during these experiments.

(4) Line 123: You mention that lambda = 0 has a specific meaning. However, you fit models where lambda is estimated on a log link, so can never be equal to zero. Have you considered this discrepancy when implementing your model? (Note that this may be nothing wrong; lambda = 0 may well be a limit case that can never be reached, only approached)

Reply 1.4: Good point. In theory, there could be individuals whose lambda = 0 and who therefore pick options at random. However, to be included in our analyses, a bird had to reach the criterion of consistently choosing the rewarded option. A bird with a lambda = 0 would never reach this criterion, so we know that none of the birds included in our analyses had a lambda = 0. We added this explanation in the method section: “The limitation that, with an estimation on the log-scale $\lambda$ can never be equal to 0, is not an issue because we only included individuals in the analyses who did not pick options at random.”

(5) Equation placement: equations 1-3 and especially 1 are called in the text long before they appear (see e.g. line 113). While there are cases here this could be valid, usual practice is usually to present equation the first time they’re called. Please consider whether or not the way you present your reasoning warrants departing from this practice

Reply 1.5: To avoid confusion, we removed the direct references to the equations from the introduction and instead state “for details on the calculations see the section on the reinforcement learning model in the Methods”

(6) Line 325: priors are set on log(lambda) and logit(phi), not lambda and phi. The distinction is important and should be made clear

Reply 1.6: We changed the statement about the prior to “We set the priors for the logit of $\phi$ and the log of $\lambda$ to come from a normal distribution with a mean of zero and a standard deviation of one.”

(7) Line 362, but also later: strictly speaking, there is an argument that can be made that these linear model equations should also count towards the equation numbering

Reply 1.7: We discussed this, also based on a previous comment by the other reviewer. We decided not to number the formula for the statistical models because we were looking to McElreath (2020) as guidance, and in there these formulae are also not numbered.

(8) Line 362, and a lot of places elsewhere through the manuscript: the letter “x” is not a valid substitute for the multiplication sign (a.k.a. \times in LaTeX notation) in equations

Reply 1.8: Thank you for providing the LaTeX notation! We have changed this in all the equations.

(9) Line 368: mu should be in Greek, not Latin here. Please check for other similar issues

Reply 1.9: Thank you for noticing this. We checked all the notations again.

(10) Line 450: MVNormal should not be in italic, just as Normal isn’t is the same set of equations

Reply 1.10: Thank you, we changed MVNormal to be not italic.

(11) Line 491: there is a line break missing, I think?

Reply 1.11: Yes, thank you, we fixed this.

(12) Line 603: “reversal”, not “revrsal”

Reply 1.12: We corrected this.

(13) Line 608-609, but also elsewhere: there are mentions of lambda/phi being big or small throughout the text, but I don’t think I saw any point of reference for what would be a meaningfully small or large value?

Reply 1.13: In the introduction, we explain that that phi ranges between zero and one, and what these extreme values represent. Similarly, we explain that lambda has to be zero or larger, but that there is no upper bound. We removed the reference to small in this line and only report the values, and in the legend of Figure 3 repeat the limits that these values can take as a reference.

(14) Line 742: there is support for a U-shaped relationship, but the compatibility intervals are also compatible with horizontal straight lines (see Figures). This should be acknowledged

Reply 1.14: The different values across the range of the compatibility interval along the x-axis are not independent of each other. If, instead of the intervals, we would plot a sample of multiple curves from the posterior, these curves would span the same area as is shown in the compatibility intervals. However, each curve would show a U-shape, because the estimate for this parameter does not cross zero. This means that the estimates from the posterior are not compatible with horizontal straight lines.

(15) Figure 6: The figure (which uses full and broken lines) and its legend (which uses dots and squares) do not match each other

Reply 1.15: We changed the legend to match the figure.

Review by anonymous reviewer 1, 27 Jun 2024 13:40

Dear recommender and authors,

I have read the latest (version 3) of the paper “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments” by Lukas and colleagues.

This version has been greatly improved since I first read it, and the authors have addressed most, if not all, of my criticisms (and those of the other reviewer, who also did an incredible job of providing feedback I must say). The abstract succinctly summarises the research objectives and results in a very understandable way, the introduction sets up the background well, the methods and results are now clearer (good job on the formatting of the equations and their explanation), and although I would still have put the "model test" as an appendix, the way it is now presented, inserted into the main text, and the way simulations are confronted with empirical data really adds up. So I would like to congratulate the authors for their persistence, their patience with my comments (and sometimes erroneous comments they had to answer anyway!), and especially for the final result. As a side note, here are some very minor comments/typos that may be adjusted as a last (and short) revision.

I hope I have not overlooked any other inconsistencies,

Thank you for allowing me to be part of this fascinating study,

Sincerely yours.

Comments:

L30: I would add “past” experiences.

Reply 2.1: We added “past” in line 40.

L49: I would consider removing “in another conception”.

Reply 2.2: We decided to keep this phrase to highlight the conceptual differences that appear to be present in the literature.

L92-93: After such a summary of the findings so far, I would have appreciated a short statement (one sentence) on what still needs to be explored (bridging with the following paragraph highlighting what is generally missing in any previous studies).

Reply 2.3: We added the following bridge sentence “To understand these changes in behavioral flexibility, we need approaches that can reflect how individuals might update their cognitive processes based on their experience.”

L109 and 119: I would delete the statement "the Greek letter...".

Reply 2.4: We decided to keep the statement for readers who might not be as familiar with mathematical notation, given that phi and lambda are key characters that we refer to repeatedly.

L232: Equation 2 is referenced, whereas Equation 1 was not. In general, the equation should also match the citation order. However, I can understand that this is not convenient in this case. Perhaps the authors will find a way.

Reply 2.5: We decided to refer to the equations at the point when we explain them (see also Reply 1.5). Here, we changed the text to “Choices during trials were based on the associations that individuals formed between each option and the reward based on their experience...Based on their choices, individuals updated their internal associations with the two options based on their individual learning rate.”

L325: For the sake of practicality, the fitting of λ and φ was done to transformed parameters in the modelling. However, in the current writing of the statistical equations, it is difficult to understand whether the authors are modelling/using the transformed/untransformed parameters (I, therefore, suddenly doubted why Gaussian models were used rather than beta models, and had to refer to the codes to allay these doubts). Perhaps labelling them as λ' and φ' when using the transformed parameters might be useful.

Reply 2.6: We changed the text to explain when we refer to logit phi and log lambda (see Reply 1.6).

L603: There is a typo "revrsal" should be "reversal" (e missing)

Reply 2.7: Thank you, we fixed this.

Figure 3: If I am not mistaken, the number of "Grackles first" does not match the sample size in the text. Are some points overlapping? I also suspect that the x-axis is cut off too early, because if I read Figure 4 correctly, a grackle is at a φ > 0.1.

Reply 2.8: As the legend explains, “The figure also shows the median 𝜙 and 𝜆 values estimated for the trained grackles”. The trained grackles are the eight individuals who experienced the serial reversal learning experiment. We focus on these individuals to show how the values changed during the serial reversal learning experiment. Figures 4 and 5 show the values for the full range of individuals.

L653: The reference to Figure 4 should in this case refer to panels a) and b). Otherwise, I think it would be more appropriate to refer to Figure 5.

Reply 2.9: The reference here was to the pattern in Figure 4 that in panel a), the number of trials increases from left to right, in panel b) the phi values decrease from left to right, and in panel c) there is no pattern in the lambda values from left to right. We were therefore referring to the comparison across all three panels, which is why we had referred directly to Figure 4. Figure 5 only shows the pattern for phi, but not for lambda. We added this explanation of the pattern to the references in this line “Figure 4, increase left to right in panel a), decrease in panel b), no pattern in panel c)”.

L715-716: I do not understand these sentences as they are written here, although I can see from the Figure 6 legend what the authors are referring to. I would encourage the authors to expand a bit here (as they have done in the figure legend) or simply remove it and leave it to the figure legend.

Reply 2.10: We added further explanations in the text “We illustrate how these differences in $\phi$ and $\lambda$ lead to slightly different ways of reaching the passing criterion during the final reversal. We used the values from the two individuals at the ends of the spectrum, the one with the highest $\phi$ and lowest $\lambda$, and the one with the lowest $\phi$ and highest $\lambda$, to explore how individuals switched from the previous option to the option that is now being rewarded.”

L719: If one had to be really picky, this is more of a magenta line than a red line (and this applies to other cases as well). Maybe use the standard red colour in this case, to avoid confusing non-colour-blind people?

Reply 2.11: We had changed the colour away from pure red based on a comment of a colour-blind co-author. We therefore keep the colour-blind friendly option, but changed the legend to refer to magenta.

L911: You miss the “on”: “for their constructive feedback ON…”

Reply 2.12: We changed this.

, posted 17 Jul 2024, validated 19 Jul 2024

, posted 17 Jul 2024, validated 19 Jul 2024Dear Dr Lukas and collaborators,

The two reviewers who evaluated the previous version of your ms have sent comments on the revised version of your preprint entitled “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments”.

Both of them are happy with the way you took their previous comments into account and your ms has now substantially improved. They made a number of minor suggestions that I recommend you take into account so that your ms is fully ready for a recommendation by PCI Ecology.

Best,

Aurélie Coulon,

Recommender, PCI Ecology.

, 10 Jul 2024

, 10 Jul 2024I have read the newly-revised version of the manuscript “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments” by Lukas et al.

I mentioned in my previous review that I did not have any meaningful comments left on the substance, so my remaining comments were on the form. The authors have taken my previous comments into account, resulting in a much clearer manuscript on which I have not much to say. There are still a few points left where they slipped in their formatting, and I have tried to point them all. In a couple cases, the revision effort may have added new points needed clarification; I have tried to signal them all too.

Please see my comments below:

(1)

Line 28: “in this experiment” is ambiguous, since the experiment in question is not mentioned before in the Abstract

(2)

In the Introduction, I feel like the paragraphs starting line 75 and line 95 may work better if switched?

(3)

Lines 83-87: are these sentences really necessary in an Introduction?

(4)

Line 123: You mention that lambda = 0 has a specific meaning. However, you fit models where lambda is estimated on a log link, so can never be equal to zero. Have you considered this discrepancy when implementing your model? (Note that this may be nothing wrong; lambda = 0 may well be a limit case that can never be reached, only approached)

(5)

Equation placement: equations 1-3 and especially 1 are called in the text long before they appear (see e.g. line 113). While there are cases here this could be valid, usual practice is usually to present equation the first time they’re called. Please consider whether or not the way you present your reasoning warrants departing from this practice

(6)

Line 325: priors are set on log(lambda) and logit(phi), not lambda and phi. The distinction is important and should be made clear

(7)

Line 362, but also later: strictly speaking, there is an argument that can be made that these linear model equations should also count towards the equation numbering

(8)

Line 362, and a lot of places elsewhere through the manuscript: the letter “x” is not a valid substitute for the multiplication sign (a.k.a. \times in LaTeX notation) in equations

(9)

Line 368: mu should be in Greek, not Latin here. Please check for other similar issues

(10)

Line 450: MVNormal should not be in italic, just as Normal isn’t is the same set of equations

(11)

Line 491: there is a line break missing, I think?

(12)

Line 603: “reversal”, not “revrsal”

(13)

Line 608-609, but also elsewhere: there are mentions of lambda/phi being big or small throughout the text, but I don’t think I saw any point of reference for what would be a meaningfully small or large value?

(14)

Line 742: there is support for a U-shaped relationship, but the compatibility intervals are also compatible with horizontal straight lines (see Figures). This should be acknowledged

(15)

Figure 6: The figure (which uses full and broken lines) and its legend (which uses dots and squares) do not match each other

https://doi.org/10.24072/pci.ecology.100468.rev31Dear recommender and authors,

I have read the latest (version 3) of the paper “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments” by Lukas and colleagues.

This version has been greatly improved since I first read it, and the authors have addressed most, if not all, of my criticisms (and those of the other reviewer, who also did an incredible job of providing feedback I must say). The abstract succinctly summarises the research objectives and results in a very understandable way, the introduction sets up the background well, the methods and results are now clearer (good job on the formatting of the equations and their explanation), and although I would still have put the "model test" as an appendix, the way it is now presented, inserted into the main text, and the way simulations are confronted with empirical data really adds up. So I would like to congratulate the authors for their persistence, their patience with my comments (and sometimes erroneous comments they had to answer anyway!), and especially for the final result. As a side note, here are some very minor comments/typos that may be adjusted as a last (and short) revision.

I hope I have not overlooked any other inconsistencies,

Thank you for allowing me to be part of this fascinating study,

Sincerely yours.

Comments:

L30: I would add “past” experiences.

L49: I would consider removing “in another conception”.

L92-93: After such a summary of the findings so far, I would have appreciated a short statement (one sentence) on what still needs to be explored (bridging with the following paragraph highlighting what is generally missing in any previous studies).

L109 and 119: I would delete the statement "the Greek letter...".

L232: Equation 2 is referenced, whereas Equation 1 was not. In general, the equation should also match the citation order. However, I can understand that this is not convenient in this case. Perhaps the authors will find a way.

L325: For the sake of practicality, the fitting of λ and φ was done to transformed parameters in the modelling. However, in the current writing of the statistical equations, it is difficult to understand whether the authors are modelling/using the transformed/untransformed parameters (I, therefore, suddenly doubted why Gaussian models were used rather than beta models, and had to refer to the codes to allay these doubts). Perhaps labelling them as λ' and φ' when using the transformed parameters might be useful.

L603: There is a typo "revrsal" should be "reversal" (e missing)

Figure 3: If I am not mistaken, the number of "Grackles first" does not match the sample size in the text. Are some points overlapping? I also suspect that the x-axis is cut off too early, because if I read Figure 4 correctly, a grackle is at a φ > 0.1.

L653: The reference to Figure 4 should in this case refer to panels a) and b). Otherwise, I think it would be more appropriate to refer to Figure 5.

L715-716: I do not understand these sentences as they are written here, although I can see from the Figure 6 legend what the authors are referring to. I would encourage the authors to expand a bit here (as they have done in the figure legend) or simply remove it and leave it to the figure legend.

L719: If one had to be really picky, this is more of a magenta line than a red line (and this applies to other cases as well). Maybe use the standard red colour in this case, to avoid confusing non-colour-blind people?

L911: You miss the “on”: “for their constructive feedback ON…”

https://doi.org/10.24072/pci.ecology.100468.rev32

DOI or URL of the preprint: https://doi.org/10.32942/osf.io/4ycps

Version of the preprint: 2

Dear Aurélie Coulon, Maxime Dahirel, and anonymous reviewer,

Thank you very much for the constructive comments on our revised manuscript! We appreciate the continued support to turn these additional analyses into a separate manuscript. Based on your feedback, we have now (1) changed the introduction to set a clearer frame that is building on the previous findings, (2) restructured the methods to link the different approaches to the questions they address, (3) changed the mathematical notation to make it consistent, and (4) checked the wording and presentation throughout. Please find our detailed replies to the comments below (in bold).

In addition to the version with tracked changes that we uploaded at the PCI Ecology website, here are links to alternative versions of the article, depending on which you prefer:

-PDF at EcoEvoRxiv (version 3): https://ecoevorxiv.org/repository/view/3689/

-rmd file with the text and the code (version-tracked): https://github.com/corinalogan/grackles/blob/master/Files/Preregistrations/g_flexmanip2post.Rmd

Thank you so much for all of your help throughout this whole research process!

All our best,

Dieter Lukas (on behalf of all co-authors)

Decision for round #2 : Revision needed

Revision needed

Dear Dr Lukas and collaborators,

Two reviewers have evaluated your preprint entitled “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments”. They were the same who reviewed the first version of this ms you previously submitted to PCI Ecology.

Both reviewers acknowledge the effort you made to take their comments into account and think your manuscript is easier to read. However, both have the same conclusion that there are still issues which make the paper very difficult to follow. They made substantial and detailed comments and suggestions regarding the organization of the ms, the presentation of ideas, concepts and methods, and equation formatting. I recommend that you closely follow those proposals, so that your manuscript can better highlight the outcomes of your study.

Best,

Aurélie Coulon,

Recommender, PCI Ecology.

by Aurélie Coulon, 04 Apr 2024 12:33

Manuscript: https://doi.org/10.32942/osf.io/4ycps

version: 2

Review by Maxime Dahirel, 06 Mar 2024 11:49

I have read the revised version of the manuscript now entitled “Bayesian reinforcement learning models reveal how great-tailed grackles improve their behavioral flexibility in serial reversal learning experiments” by Lukas et al. Overall, I’m very satisfied with the way the authors have reworked their paper in response to our comments. The improvements are substantial and I commend the authors for the amount of work which must have been done, and I don’t have anything left to say about the substance of the manuscript. As such, all the comments I have left are about form or formatting, especially equation formatting. There are much, much fewer of them than in the previous version (the manuscript is also improved on that side!), but there are still there.

Importantly though, please do not consider the fact my comments are only of form now to handwave them as minor style disagreements; in a dense, concept- and equation-heavy manuscript such as this one, clarity is paramount, guesswork should be avoided, and there are still quite a few points in the manuscript where I had to guess.

R1 Reply: Thank you for your patience and diligence! Having this level of statistical detail in an article is new to us, so we appreciate the guidance on how to present this in an accurate way.

Please see my detailed comments below:

R1 COMMENT 1 - [this one is more of a remark or advice for the future, and can be safely ignored for the purposes of this revision if the authors do not want to re-run anything]

In my previous review, I mentioned the need of accounting for the uncertainty in phi and lambda when using them as predictors in subsequent models. I suggested several methods to do so, and the authors chose one, which I’m OK with. However, they noted in their reply that:

“The estimated phi and lambda values are predictor, not outcome variables, which is why the measurement error model does not appear appropriate in this case”.

This is actually not an issue; error-in-predictors models exist, and can be fitted easily with Stan. See ch. 15.1.2 in (McElreath, 2020), or the documentation of the brms package for examples of implementations.

R1 Reply 1: Thank you for the clarification. That will be helpful for future analyses. We had interpreted the models in the examples in ch 15.1.2 in McElreath 2020 as instances where there was a single measurement for each predictor variable, which is associated with an unknown error that is estimated as uncertainty in the model. This to us seemed different from the case here where we have multiple measurements for each predictor variable that reflect the uncertainty in the estimation. That is why we chose the alternative approach, and it is good to hear that our chosen approach addresses the problem you previously raised.

R1 COMMENT 2 - Please check the correct formatting of literature references throughout the text. In several cases, first/middle name initials appear when they shouldn’t (which may be rooted in the formatting in the bib file itself), and I’ve seen a few “(“ parentheses closed with “]” brackets rather than “)” ,or vice-versa. Examples can be found lines 61 or 863-865, but please check carefully everywhere. Also, at least one reference is left as its BibTex citation key rather than properly formatted (line 211, likely an @ missing in the raw Markdown). Finally, please note that in the References list at the end of the manuscript, the csl style that is used appears to auto-add “https://doi.org/” to whatever is in the URL/DOI field, leading to things like “https://doi.org/https://doi.org/10.1038/npp.2017.108” which while mostly harmless (the internal link still points to the right place) don’t look good.

R1 Reply 2: Thank you so much for catching all of these! We fixed the brackets so the in text citations show up properly, and we changed the .csl file so author initials don’t show up in the in text citations. The double doi.org in the URLs in the references was an error we made in the bibtex file, which we now fixed.

R1 COMMENT 3 - In a few places sentences may run a bit too long, and would benefit from added commas at least, and maybe being split in two in some cases. This one is extremely subjective as there are no egregious examples, so I am leaving it fully to the authors’ appreciation.

R1 Reply 3: We have gone through the text and tried to split sentences wherever possible.

R1 COMMENT 4 - There are several equation notation inconsistencies between batches of equations, but also within batches. While individually they are not catastrophic, their accumulation makes understanding what’s happening much, much more difficult that it ought to be. I again strongly recommend the authors follow a style guide; I suggest (Edwards & Auger‐Méthé, 2019) for an explicit one, but an implicit style such as the one in (McElreath, 2020) is fine as long as it is followed consistently.

Below are the main formatting “infractions” to convention I noticed. Please, please note that the specific line examples I give are examples and are not an exhaustive list, and the authors should go through the notation with a very fine comb again:

R1 Reply 4: thank you so much for the advice! The Edwards & Auger‐Méthé, 2019 reference is amazing! We went through and updated all of the equations and models so they are consistent within and between, per this comment and comments 4.1 through 4.10. For comment 4.7, we were able to put it in matrix notation as you suggested - thanks very much for this.

R1 COMMENT 4.1 - Inconsistent use and styling of indices (i,j,t…): sometimes they are as subscript, sometimes not; sometimes they are in brackets, sometimes not. This becomes especially problematic when word indices (bird, first, last) start to be used rather than only single letter one. Much more importantly for the comprehension of the equations, in some cases (especially the GLMMs later on) they are absent when they should be present (see e.g. equation block starting line 427, where I am fairly sure that several parameters are bird-specific (including phi, lambda, mu…) but do not have a bird index, when other have.

R1 COMMENT 4.2 - Use of different indices to mean the same thing. The “individual bird” index switches from “j” (Bayesian reinforcement model description) to “bird” (GLMM equation starting line 428) to “i” (line 517) after several equations of being forgotten (cf problem above)

R1 COMMENT 4.3 - Use of the same index to mean different things. “i” is used to denote an option in the Bayesian reinforcement model description, but an individual grackle later on (see above).

R1 COMMENT 4.4- Inconsistent or seemingly inconsistent styling of parameters: line 428, a and b are both italicized and not, on the same line. While this may refer to the fact the italicized version is a bird-specific parameter while the non-italicized is a population parameter, this is an extremely unclear styling choice, and it would be better to make another (Roman a for bird-specific and Greek alpha for population, for instance)

R1 COMMENT 4.5 - Missing line breaks in equation blocks (e.g. lines 428, 444)

R1 COMMENT 4.6 - Matrix notation at odds with convention (Rho and S not in bold lines 428-430)

R1 COMMENT 4.7 - Incorrect notation for the decomposition of the covariance matrix S into the diagonal matrices containing the SDs and the correlation matrix Rho (line 429). See 14.1.3 in (McElreath, 2020) for an example of correct notation

R1 COMMENT 4.8 - Greek letters written as plain Roman beyond the first mention cases the authors specifically discussed in their response (Rho line 430, lambda lines 753/762, phi line 803 and figure 7, the lambda ones being clearly LaTeX typos)

R1 COMMENT 4.9- Use of “code-like” notation in the middle of mathematical equations. For instance, the use of “*”, rather than “×” (line 428 and below), the persistence of “^” to denote superscript/power rather than actual superscripting, or the use of “dnorm()” when “Normal()” is meant (line 503 and below).

R1 COMMENT 4.10 - Not following the convention that equations should be part of sentences. An extremely minor one compared to the above, but this results in missing commas or periods, and in unnecessary repetitions. For instance, lines 248-249 or 260-261 could be simplified if following that convention.

R1 COMMENT 5 - Something that may or may not be related to COMMENT 4:

In the description of the Bayesian reinforcement model, the authors mention that phi and lambda are estimated on the logit and log-scale, respectively.

First, my understanding is that it’s simply because phi is a proportion (given its use in a 1- phi equation line 248) and lambda is strictly positive, so fitting the model and setting priors ultimately to logit(phi) and log(lambda) instead is a natural way to respect these constraints during estimation, and usual practice. However, that’s a slightly different rationale to the one mentioned by the authors line 280-282. For instance, I’m not sure the exponentiation in Eq. 2 line 260 has anything to do with lambda being estimated on the log-scale. If it actually does, then I missed something /something is missing in the description of the model, and log(lambda), not lambda should be what’s in Eq. 2.

And that’s where we arrive to the idea this comment may be related to COMMENT 4 about equation clarity. In this block of equations, and maybe all the following ones, it’s unclear where and when the authors mean to refer to phi/lambda or to logit(phi)/log(lambda) in their equations. Being explicit about it rather than implicit would greatly help understanding.

R1 Reply 5: Apologies for the confusions. You are correct, in the model phi and lambda are estimated as logit(phi) and log(lambda) to reflect the constraints on the possible values that they can assume. The exponentiation in Equation 2 is independent of this, it reflects the ‘normalized exponential’ (or softmax) function to convert the values into a probability distribution of the two outcomes. We have changed the text accordingly to explain both the estimation of the parameters and the exponentiation in the equation.

R1 COMMENT 6 - This is an unusual parameter choice for the Gamma-Poisson aka Negative Binomial, line 516 (reminder that the two terms refer to the same distribution).

First the choice of a discrete count model for a latency (continuous even if measured to a discrete amount of time) is unusual. Second, setting that aside, in most mu-based parametrisations of the Negative Binomial I’m familiar with, mu refer to the mean count, so higher mu mean higher values, contrary to what the authors state line 526. And the second parameter, while linked to overdispersion, is not equal to the SD, so it is usually not called sigma, to avoid confusion. (see COMMENT 4).

At this point, I’m not clear if this is simply a notation issue, a parametrization issue (this distribution can be parametrized with mean and precision, mean and (over)dispersion, or with the Gamma shape and scale parameters), or something else, like a previous reference using “Gamma-Poisson” to mean something different to what is usually meant in the literature. In any case, some clarification is needed, possibly with a reference.

R1 Reply 6: Sorry about the parameter confusion! The Gamma-Poisson parameters are usually phi and lambda, but we wanted to avoid those because they are used in a different context in this article. We can see how mu and sigma are confusing here though because that is standard notation for a normal distribution. We changed these parameters to m and s. We also fixed the explanations. We had initially thought of reflecting latency as the inverse of a rate of something happening: if something happens a lot, the latency until it happens again will be shorter. However, you are correct in that we implemented a model where we estimate latency directly, rather than indirectly from what happens. In this case, higher rates (counts) means more seconds, and therefore a longer latency. We had interpreted the outcomes as such in the results and in discussion, but not changed the explanation in the methods accordingly. We also clarify that the second parameter is linked to the variation in the overall distribution. We had chosen the Gamma-Poisson because we considered our measure of latency a count of seconds (Poisson), with a skewed distribution because the mean rate of waiting differs among birds (Gamma). We have added these explanations for the choices to the description of the model.

R1 COMMENT 7 - The authors consistently use the term “correlation” to refer to parameters that are emphatically not correlations, but instead slopes/coefficients from their models (see paragraph starting line 667 for some of the most flagrant examples, but this is present throughout the Results). Correlations are bound between -1 and 1, while slopes/coefficients from a model linear part are not.

R1 Reply 7: Thank you for pointing out the distinction between correlations and correlation coefficients. We have changed the presentation of the results throughout (“here and hereafter, we report the posterior mean slope of the association with the 89% compatibility interval”). We still use the term correlation when describing that, overall, two variables are negatively or positively statistically associated.

MINOR COMMENTS

R1 C8 - Line 29: species name should be italicized

R1 C9 - Line 69: “greek" should be “Greek”, capitalized

R1 C10 - Line 267: should be “P” italicized, not “*P” non-italicised; seems to be a Markdown typo

R1 Reply 8-10: We fixed these, thank you!

R1 C11 - Line 292: the authors say they use the posterior package to sample the model posterior, but do not describe the number of samples/chain in the initial model they are sampling from (contrary to later on)

R1 Reply 11: We added the information that we ran 4 chains with 2000 samples each.

R1 C12 - Line 317-318: maybe make it clearer that it is one run of simulations (there are 640 simulated individuals, not e.g. 640 * 1000 simulations).

R1 Reply 12: We changed the description of the agent-based model, and clarified that for a total of 640 individuals we simulated once their choices during a reversal learning experiment.

R1 C13 - Line 355-357: To clarify, this refers to posterior predictive checks, right? (Gabry et al., 2019)

R1 Reply 13: It does not seem to be exactly the same as the posterior predictive checks in Gabry et al 2019, because we did not always generate data from the models. We assessed whether the slope of the predicted correlations fell within the data range, and we now explain this in the method section. Posterior predictive checks of the implementation of the Bayesian reinforcement learning model were part of an earlier article (Blaisdell et al. 2021 10.24072/pcjournal.44).

R1 C14 - Line 436-437: shouldn’t it be “equals 0” and “equals 1” respectively, following usual notation of linear models?

R1 Reply 14: Yes, thank you, we changed this to “one from the beginning (𝑟 = 0) and one from the end of the serial reversal experiment (𝑟 = 1)”.

R1 C15 - Line 439: the content between parentheses is very unclear; should that be something like “bird-specific intercepts and bird-specific changes are drawn from a bivariate normal distribution with non-zero correlations between the two”?

R1 Reply 15: We added more explanation to the model, including a definition of all the parameters and the distributions.

R1 C16 - Figure 1: Unless the bird on the figure was drawn by one of the authors, the artist should be credited (and even then, I’d argue; highlight scientist-artists!), and the licence mentioned. (as an aside, if needed, there is one public domain grackle illustration in Phylopic)

R1 Reply 16: the image of the bird that we used had a CC0 license, which is why we didn’t provide photo credit. We replaced it with one of the grackle photos that Dieter Lukas took, and we provided photo credit and the license in the figure caption.

R1 C17 - Figure 2 to 6, 8: “phi” is lower-case in the figure axes labels, but upper-case everywhere else in the manuscript. The legend mentions lines around the points to denote confidence intervals, but these are absent from the actual figure.

R1 Reply 17: Thanks for spotting this. We added the intervals to the estimated values in Figure 2. We changed all the figures to add the correct notation for phi. While we did this, we also changed the color scheme on all of the figures to make them more accessible.

R1 C18 - Figure 4: A tiny design choice makes the figure a bit harder to understand than it ought to be. The use of horizontal but not vertical grid lines make it seem like the horizontal division of the figure plane in 4 parts has a meaning; then readers might wonder, even for a bit, why “unlikely to reach criterion” corresponds to y values lower than 2.5 and “likely” between 5 and 7.5. I would suggest to remove the horizontal lines, plus maybe to color the “likely” and “unlikely” regions of the plot to remove ambiguity

R1 Reply 18: Thank you for the suggestion. We made the changes to the previous Figure 4 - now Figure 3 (removed horizontal lines and added shading to the two regions). We agree that this makes the main message easier to understand.

R1 C19 - Line 858: “IGrackles” should be “Grackles”

R1 C20 - Recurring: as the authors follow most of the usual Bayesian naming conventions, the use of “confidence intervals” should maybe be replaced by “credible”/”compatibility”…

R1 Reply 19-20: We fixed the typo and updated “confidence intervals” to say “compatibility intervals” throughout.

REFERENCES

Edwards, A. M., & Auger‐Méthé, M. (2019). Some guidance on using mathematical notation in ecology. Methods in Ecology and Evolution, 10(1). https://doi.org/10.1111/2041-210X.13105

Gabry, J., Simpson, D., Vehtari, A., Betancourt, M., & Gelman, A. (2019). Visualization in Bayesian workflow. Journal of the Royal Statistical Society: Series A (Statistics in Society), 182(2). https://doi.org/10.1111/rssa.12378

McElreath, R. (2020). Statistical rethinking: A Bayesian course with examples in R and Stan (2nd edition). Chapman and Hall/CRC.

Review by anonymous reviewer 1, 03 Apr 2024 17:10

R2 C0

I apologise for the time it has taken me to provide feedback on the paper. It has been a long time since the original submission, so I admit to reading it almost as a new paper. Overall, I noticed an important effort to improve the contextualisation of the study. However, I still find the paper very difficult to follow. This is not only because of the density of the analyses (there are indeed many, and I would encourage the authors to focus on fewer questions for further work), but also because it seems that the paper is a patchwork of somewhat disconnected analyses (first a modelling test, then a test of the two drivers of reversal ability, and finally a test of how behavioural flexibility is related to performance). In addition, I still believe that the paper in its current form cannot be considered a “stand alone” paper.

R2 Reply 0: Thank you for going through our revised manuscript in such detail. We have changed the framing and structure to refocus the manuscript. For the details, see our replies below.

R2 C1 - This is due, in my opinion, to (a) many references, without brief summaries, to other work by the team (including previous work from which this paper has been extracted). I understand that one cannot repeat everything said in previous papers, and that this part of the paper was not originally intended to stand alone, but a minimum of additional explanation is needed (e.g. L152 or L490-493).

R2 Reply 1: Thanks for this perspective. In our initial attempt to make this a separate, stand-alone, manuscript from the articles we previously published, we had decided to give this manuscript a completely new focus. We now realize that this leads to confusion. We have rewritten the introduction to explain how the analyses we present in this manuscript arose from our previous studies. We added a paragraph in the introduction that summarizes the previous methods and findings, and we build the predictions from these.

R2 C2.1

(b) Some parts relevant only to this paper are still unclear for two reasons. First, the explanations are distilled bit by bit when the authors had to use them. This seems efficient for people who know the whole setting, but not for people who are mostly naive to the whole framework. For example, it would be essential to distinguish clearly and sequentially between (1) the empirical setting, (2) the agent-based model, and (3) the statistical models, rather than following the research question titles and adding each of these parts for each question.

R2 Reply 2.1: We have moved information from the research questions into the rewritten introduction. This helps with setting the frame of the different aspects our manuscript combines. We link the research questions to whether they rely on the agent-based model, the mathematical derivations, or the empirical setting. We also link the questions back to the approaches in the methods, and repeat this information in the result section to clarify what approach each result was based on.

R2 C2.2 - Second, the authors often assume that the readers are experts in the field or even in their study case/framework. They regularly refer to certain elements without having defined them properly beforehand (e.g. “the parameters” L145, which we assume are the ones described at length without any certainty, “the models”, L165, “the performance”, L147). This is true, in my opinion, for the “background” in the introduction or for the methods (e.g. L152, why is 17 out of 20 for a difference from what is expected by chance?), which makes it difficult to follow the reasoning or even to be critical of it.

R2 Reply 2.2: During the rewriting of the introduction and the methods, we paid attention to explaining terms on their first usage. We also checked to make sure that terms are used consistently rather than in different contexts. The additional information on the previous studies we added in the introduction now provides further context on particular decisions taken during the experiment (e.g. that the rule to set 17 out of the last 20 trials as passing criterion was based on this being a significant association according to the chi-square test).

R2 C3 - (c) The paper mixes both “theoretical” and “empirical” efforts. However, a theoretical and an empirical narrative are often different in order to be clearly understood. At present, I feel that the presentation of the theoretical part (e.g. modelling and formulas) lacks a certain formalism. I personally found it very difficult to understand how the modelling works. As a note, I still have mixed feelings about this modelling part overall (see comments on the Bayesian model). I really think that the authors should decide whether this paper should be considered a major “methodological advance” (which I don’t think it is, although it is true that the model may have been tuned for these specific analyses. However, this kind of hand-tuning is often done to adapt to the study system and research questions), or an “empirical test” (which I think it is).

R2 Reply 3: You are correct in that our main aim with this manuscript was to better understand the behavioral flexibility of great-tailed grackles. On the theoretical side, the focus is on the empirical insights that the novel methodology of the Bayesian reinforcement learning model can provide in a context where it previously seems to have not been applied. Accordingly, we still think this manuscript benefits from a description of what the method does and how to apply it, so that others can best judge when and how to adapt it as part of their research. Because the Bayesian reinforcement learning models seem not to have been used on these kinds of temporal data before (and also only very rarely on reversal learning data in general), we feel that we first need to show that the method works to answer the questions we are interested in. In our understanding, this goes beyond ‘tuning’, which is why we added the simulations as a proof of concept. We restructured our introduction to clarify our main aim with this manuscript, and to explain how the different theoretical and empirical parts come together to address this.

R2 C4 - Below, I offer point-by-point comments which I hope will help the authors to see why I have had difficulty in understanding, and therefore evaluating, this piece of research. I admit that I am not the most expert in this area of research, but I hope that these comments will highlight what a more naive reader might find problematic in reading it.

Best regards.

1 MAJOR COMMENTS

Abstract: The emphasis in the presentation is on the methodology used (both the “Bayesian” approach and the combination of simulation and experiments). However, in the presentation of the results, the Bayesian model, the simulation or the experiments used are not explained per se (and sometimes come out of the blue, as in L42-43), nor how they were combined. I would encourage the authors to present their research questions, methods and results in a more step-by-step manner. Moreover, some crucial information is missing (e.g. sample sizes; quantification of the magnitude of effects, such as how the performance of grackles, which is not defined, varies in the puzzle box test). Some terms are also vague (e.g. L35, although somehow understandable after reading the whole paper, the use of “phase” may not be immediately obvious to a novice).

R2 Reply 4: We rewrote the abstract to match the revised structure of the manuscript.

R2 C5 - 1.1 Introduction

• The first paragraph mixes an explanation of the general context (L51 to 55) with a presentation of methodological approaches (serial reversal experiments). I find this confusing. In this line I would move L49-51 to L55, after the citation. Also, I do not see the point of introducing the experimental approach now, and would move this to 1.2 Research questions paragraph 4, where this methodological background is presented.

R2 Reply 5: We restructured the introduction following these suggestions by first introducing the general context, and moving the description of the approaches after the description of the experiments. We also combine the theoretical advances in understanding the potential cognitive processes underlying behavioral flexibility with the methodological advances to estimate these processes with the Bayesian reinforcement learning model.

R2 C6 - I am confused. The wording in the text suggests that the updating of associations is related to the change and reliability of the environment. A contrario, in the figure it is only related to the reliability of the environment, as if a process (updating associations or sensitivity of associations) is related to an aspect of the environment (change over time and reliability). I would encourage the authors to clarify this. In addition, the authors treat φ and λ as separate and even opposite. The problem with this correlation has been raised before, but it is even more glaring here: the current presentation of this background is inconsistent with L494, where this anticorrelation is only “expected”, and challenges research question 3), for which φ and λ may explain the birds’ performance differently (which should be impossible to disentangle if they are indeed negatively correlated). However, as shown in Figure 4, there is a relationship (hence an interaction) of the two parameters together on the performance of the birds. I am sure I am misunderstanding some of this and would encourage the authors to make the link between the compatibility of the statements more explicit.

R2 Reply 6: We recognize that the confusion arose because we had previously mixed predictions for what would be the best behavior during a single reversal with what would be the best behavior during the serial reversal learning experiment. During a single reversal, individuals can show different approaches to gain the reward consistently. These different approaches are characterized by different values of phi and lambda. We illustrate this in Figure 6. However, if individuals experience serial reversals, the range of possible phi and lambda values that lead to an efficient behavior is much more limited. Our focus in the manuscript is now on the behavior at the end of the serial reversal learning experiment. We focus our predictions specifically on this. For this serial reversal learning experiment, where cues are consistently reliable but changes are frequent, we predict and show that individuals need to have large phi values to efficiently obtain the reward. The revised figure (previously figure 4, now figure 3) now shows this dependency on phi. The difference to the previous version of the figure is that we now assume that individuals need to change their associations between cues and the reward, rather than starting from fresh without any previous associations. We think that this distinction between the approaches shown during a single reversal versus the overall efficiency during the serial reversal learning experiment clarifies the previously confusing statements about how phi and lambda might change independently even if both influence the behavior.

R2 C7 - A large part of the introduction is devoted to methodology. However, as far as I understand, this is not a methodological paper. I would therefore encourage the authors to increase the description of the biological scenarios of interest (as presented in the second paragraph) and to reduce the methodological description. In addition, it is sometimes unclear why some aspects are discussed at length (e.g. the three “types” of information L116 to 126 - which I would not actually consider as types), as they do not seem to be “exploited” afterwards.

R2 Reply 7: In the introduction, we increased the description of the biological observations we are aiming to explain. We also reduced the methodological description, combining only the relevant information on how individuals might approach serial reversal learning experiments with what we measure in this study.

R2 C8 - 1.2 Research questions

Overall, the research questions are long and the authors could aim for a more concise pre- sentation.

R2 Reply 8: We have shortened the text under each research question.

R2 C9 - L184 - It is unclear what “abstract” means: how did the models differ from the experiments? Also, I do not understand the argument about differences in estimates between models and experiments. If the models were intended to evaluate the optimal strategy, then it is not necessarily the model structure that may cause differences, but that animals may not behave optimally in nature. Could the authors clarify this statement?

R2 Reply 9: We removed this statement from underneath the research question. We instead integrated the information on what has previously been done, and how our research builds on this, to the introduction. The main difference of our models to the models that were previously published by others is that we investigate the temporal changes in behavioral flexibility that individuals show in response to experiencing particular conditions. The previously published models were evolutionary models, where the authors investigated which combinations of phi and lambda might be expected to be selected over time in different environments. These environments were abstract, setting different fixed rates of changes and reliabilities in the rewards. The evolutionary models therefore do not reflect directly how individuals phenotypically change their behavioral flexibility as they experience their environment, both because of the time scales and because of the choice of parameters to reflect particular environments.

R2 C10 - L199 - Why would φ and λ not interact to describe the performance of individuals? (as sug- gested by the “or”) Question 3) The prediction is not consistent with the research question (which aims to understand which of the factors explains more variation in performance).

R2 Reply 10: We changed the research questions and predictions to match the distinction between the expected influence of phi and lambda on the behavior of individuals during a single reversal and the expected changes during the serial reversal learning experiment (see Reply R2.6).

R2 C11 - L221 - It seems to me that the authors alternate between behaviours and strategies. I think this is not the same thing (a strategy could consist of one to many behaviours to start with) and the authors should stick to one word.

R2 Reply 11: We checked the text to refer to ‘behavior’ when referring to what one individual does, and to ‘approaches’ when comparing different behaviors exhibited by two or more individuals.

R2 C12 - 1.3 Methods

Bayesian model

I find it disconcerting to be presented with the statistical model before the “observational data” (whether from simulation or experiment) are presented. I suggest that the authors restructure the methods in a more classical way, distinguishing between what leads to “data” (simulation scenarios, experimental protocols, etc.) and what is “analysis”.

R2 Reply 12: We changed the structure in the methods to first describe the three datasets we used (simulations, grackle serial reversal, grackle multi-option puzzle box), followed by the reinforcement learning model that is key to all further analyses, before moving on to the specific analyses for each question.

R2 C13 - Furthermore, in this model I notice a lack of the definition of parameters. For example, the interval for defining association values is not mentioned.

R2 Reply 13: Following the advice of reviewer 1, we clarified the mathematical notation of equation 1, adding a clearer index that the equation estimates “how the associations…change from one trial to the next”.

R2 C14 - L305 to 313 As I understand it, modelling association changes across choice events is in- evitable of the mechanistic model used (rather than the explanation offered in L305-306). It is therefore not helpful to me (and perhaps to other readers) to have these after the “Implementation” section. The authors might therefore consider structuring the statistical modelling by distinguishing between (1) the background, with the equations, (2) the param- eterisation, and finally (3) the implementation (i.e. using Stan/R), and (4) the validation.

R2 Reply 14: The description in these lines referred to a separate, mathematical approach. We added explanations about this approach in the method sections of the questions that it is relevant for. We used the equations to derive predictions about expected choices in the reversal learning experiment. This approach is separate from the implementation of the Bayesian reinforcement learning model, which backwards inferred parameters from the observed behavioral choices. We structured the method section to have, after the description of the data, the background, including the equations, of the Bayesian reinforcement learning model. This is followed by the parametrization and implementation. After this, we describe for each of the questions the analyses that were used to address these.

R2 C15 - I don’t understand what a categorical likelihood means. My understanding of this model, and of mechanistic models in general, is that the equations allow the authors to estimate, for each event and individual, the probability of its realisation, given some values of φ and λ. The likelihood can therefore be the product of these probabilities: the best-fitting model (i.e. a particular combination of φ and λ) is identified when this product is maximised.

R2 Reply 15: Apologies for the confusion. We meant to refer to the categorical distribution (or generalized Bernoulli distribution), which we used to determine which of the options individuals would actually choose given their associations. The equations only calculate the probability that an individual will choose a given option. The step using the categorical distribution links the probabilities to which of the different options was observed to be chosen and which was not. We have changed the text to clarify this.

R2 C16 - It is not specified that these models were fitted at the individual level (which they certainly were, as the authors obtained φ and λ for each bird). I think this should be specified.

R2 Reply 16: We now clarified the notation in the equations and models to indicate when a term applied at the individual level.

R2 C1 - L281: Is the logit scale for the values obtained positive? From equation 1, it seems to me that the authors use the inverse logit (or so I assume from “logit scale”) to constrain a value of φ between 0 and 1 (so positive, yes, but not only).

R2 Reply 17: Yes, as also mentioned by reviewer 1, the logit scale was used to constrain the values for phi between 0 and 1 to reflect that this is a proportion. We clarified this in the manuscript.

R2 C18 Question 1) - In these simulations, I don’t see the point of having 32 populations if these populations are (almost) perfectly identical, since they can only differ in the range of the parameters being studied? Also, if any combination of φ and λ is possible, this violates the expecta- tion/description that both parameters are opposite. Could the authors specify what insights can be gained from modelling unrealistic conditions (e.g. high φ and high λ, etc.)? Overall, the explanation of the simulation is not clear enough and relies too much on the previous publication by Logan et al, 2023. I think it is important that the paper can be read without necessarily reading all of the authors’ previous work, and would therefore encourage them to provide more detail on this modelling task.

R2 Reply 18: Following your advice to focus on the empirical aspects, we have reduced the analyses of the simulation data in the manuscript. We now only use these agent-based simulations to assess the power and feasibility of the Bayesian reinforcement learning models to detect changes during the reversal learning experiments. As answer to the question that is raised here, the combination of high phi values and low lambda values is what we learned to be the relevant combination in these serial reversal learning experiments. Other combinations of phi and lambda are not impossible, in fact we would expect all combinations depending on the environment that an individual experiences (for example, low phi and high lambda in an environment that changes rarely but is unpredictable).

R2 C19 - L346-347: Why should a slope of 1 be assumed? In my opinion, it will give evidence of a correlation, but not necessarily a match, if a is not zero.

R2 Reply 19: Yes, thanks, the match is expected if the intercept is at 0 and the slope is close to 1. We have changed this accordingly in the methods and in the results.

R2 C20 Question 3) In order not to break the flow of the paper, I would encourage authors to include the information on research permissions in a different section (e.g. at the end of the manuscript).

R2 Reply 20: Good point. We moved it to a new section at the end of the document called Ethics.

R2 C21 Question 4) - Authors can continue to refer to birds as “bird” or “j” in the equations.

R2 Reply 21: Thanks for this. We now refer to birds as subscript b and made this consistent throughout the manuscript.

R2 C22 - The equations seem inaccessible to most readers. For example, the meaning of MVNormal(), L428, LKJcorr() L430, and the associated explanation (I think for the first one) L439-440 is unclear to me.

R2 Reply 22: We added further descriptions of all the parameters in the models, including explanation for these functions.

R2 C23 Question 6) - What is the unit of latency?

R2 Reply 23: The latency unit is the number of seconds, which we now clarify in the text.

R2 C24 - A correlation between φ and λ may (or may not) affect the quality of the fit (i.e. the value of the estimates). This has been raised before, but I may have missed how the authors investigated it (and thus whether it was consequential or not). Also, there are problems with the colours of the curve and the confidence intervals (they are different). Finally, I do not see the point of clustering the trials, even for “clarity”.

R2 Reply 24: Following your advice to focus on the empirical aspects, we have reduced the analyses of the simulation data in the manuscript. We removed the analyses mentioned here and the corresponding figure.

R2 C25 - 1.4 Results Overall, the readability of the results would be improved by a more concise and ‘recognisable’ notation (e.g. presentation of confidence intervals between square brackets).

R2 Reply 25: We made the results more streamlined by introducing the mean and the 89% compatibility interval on the first mention, and then not mentioning this text thereafter, which greatly reduces the amount of text in this section.

R2 C26 - In my opinion, this first paragraph questions the need to include testing the model as a research question, and I was not fully convinced by the authors’ response. As suggested by the other reviewer, if I am not mistaken, the structure of the simulated data and the model are the same, so we simply expect the model to accurately capture the simulation parameters.

R2 Reply 26: The agent-based simulation reflects the stochasticity in the choices. Based on learned associations, individuals have a certain probability to choose the rewarded option: for example, at the beginning of the experiment, individuals have no information. They are somewhat attracted to both options, because they might be curious, but which of the two options they choose is random. Even after they have experienced both the rewarded and the unrewarded options, they might choose the unrewarded option in any given trial. In addition, an individual that by chance chooses the unrewarded option in the first trial is expected to show a different set of choices afterwards than an individual who did choose the rewarded option. These two factors introduce noise when using the phi and lambda values to simulate choices. On the flip side, because of the probabilistic decisions a given short series of choices (e.g. rewarded [r], followed by non-rewarded [n], followed by r, n, r, r, n, r) can occur even if individuals have different phi and lambda values. Over more choices we might expect differences though among individuals because the underlying associations, and how these translate into choices, will change differently according to the different phi and lambda values. In question 1, we want to know what sample per individual is necessary to re-infer the correct phi and lambda values from the choices despite this noise. We clarified this in the research question and in the methods.

R2 C27 - Figure 3: I have a naive question: since the modelling is based on a log-linear relationship, how come the predicted curves can have a sigmoid-like pattern? Is it because of the logit transformation? But I think this curve corresponds to λ (for which there is no such transformation, am I right?).

R2 Reply 27: We deleted the previous figure 3 (see R2 Reply 24). The non-linear patterns shown in this figure, and some of the other figures which are still in the manuscript, reflect the results from the statistical models, not the Bayesian reinforcement learning model per se. In these statistical models, we sometimes also have different links between the distribution of the outcome and the predictor variables. For example, the statistical model for the results presented in Figure 5 has a log-link, the statistical model for the results presented in Figure 7 has a logit-link.

R2 C28 - Figure 2: I would include the results for both φ and λ. Also, I am unclear as to why only 30 individuals are shown when, if I followed correctly, many more were simulated?

R2 Reply 28: We changed the figure to show the results for all simulated individuals for both phi and lambda.

R2 C29 - L549-553: These sentences are unclear to me. In particular, I do not understand why this is related to the observed shift, nor how this explanation is actually valid.

R2 Reply 29: We clarified how the mis-estimation of the values from small samples affects both phi and lambda in a linked way: The model assumed that the simulated individuals would not require a large phi because a small difference in the associations would already be informative. Individuals would then be expected to consistently choose the option that was just rewarded, and they would because of their large lambda. In addition, these shifts mean that phi and lambda are no longer estimated independently. The model estimated that if, an individual had a particularly low phi value, it would require a particularly high lambda value.”

R2 C30 - L597-624: What research question is this really referring to? It is supposed to answer Q2, but this was not explained in the associated methods. Also, please be more specific in the manuscript in general. What is “this likely trade-off” here? I understand it to be the one between φ and λ, but it seems less clear why this should be likely (despite, I acknowledge, the many previous explanations).

R2 Reply 30: We changed this section 2 to match it to the frame focusing on serial reversal learning experiments. We made the research question more explicit, added details in the methods, and expanded on the mathematical derivations in the result section.

R2 C31 - L689-692: Have the authors explored from simulations the φ and λ estimates when considering multiple reversals? Are these also correct?

R2 Reply 31: The agent-basedsimulation data we used here were initially generated for a different purpose, where we were only interested in the behavior during a single reversal. We have not explored simulations of multiple reversals. With the revised focus of this manuscript on the behavior in serial reversal learning experiments, we therefore removed some of the analyses of the simulated data because they did not speak to the serial reversal paradigm. We did not expand on the simulations because we realized that we can generate more specific predictions also with an analytical approach (question 2).

R2 C32 - L721: I don’t understand what this estimate refers to. Is it the one associated with the variable reversal? In this case, it would be good to add the variable being examined for each estimate.

R2 Reply 32: We have checked the results throughout to explain in the text what association the reported results represent. In this case, we found that “individuals who had higher lambda values in the beginning did not necessarily have higher lambda values than other individuals at the end of the serial reversal learning, with values at the beginning not associated with values at the end (+0.17, -0.67 to +0.97, n=8 grackles).”

R2 C33 - L711-732: Overall, I am confused, because as I understand it, the first lines (L711 to 722) indicate that there is a homogenisation of φ and λ over the experiment between grackles. But then it seems that individuals have different “strategies” overall? Does the strategy refer to the final values or to the changes in φ and λ? If so, why is this a “strategy”?

R2 Reply 33: We removed the reference to strategies, as we can only describe the behavior of individuals (see R2 Reply 11). We also now explain the difference between the variation that might occur among individuals within a single reversal, and the changes that are expected for all individuals experiencing the serial reversal learning experiment (see R2 Reply 6).

R2 C34 - Figure 7: Is the difference between the curves really significant? I do not know what equation was used to calculate this probability (see my comment in the Minor Com- ments for the need to give this information), but certainly a confidence interval can be calculated and should be added to the figure.

R2 Reply 34: We expanded the description of the methods for these mathematical derivations. We added the specific approach to the section of the respective question in the methods. The curves are derived mathematically as exact estimations, they are not estimated from data. With such an approach, we know that the two curves are different, and because they are exact rather than based on samples we also cannot speak about significance.

R2 C35 - 1.5 Discussion L779-807: This is a very long summary of the results. Although appreciated (given the amount of analysis), it would be even more efficient to try to link it to some “ecological” or “behavioural” statement (e.g. avoid mentioning φ and λ, but what they represent behaviourally).