In the field of ecology, there is a growing interest in machine (including deep) learning for processing and automating repetitive analyses on large amounts of images collected from camera traps, drones and smartphones, among others. These analyses include species or individual recognition and classification, counting or tracking individuals, and detecting and classifying behavior. By saving countless hours of manual work and tapping into massive amounts of data that keep accumulating with technological advances, machine learning is becoming an essential tool for ecologists. We refer to recent papers for more details on machine learning for ecology and evolution (Besson et al. 2022, Borowiec et al. 2022, Christin et al. 2019, Goodwin et al. 2022, Lamba et al. 2019, Nazir & Kaleem 2021, Perry et al. 2022, Pichler & Hartig 2023, Tuia et al. 2022, Wäldchen & Mäder 2018).

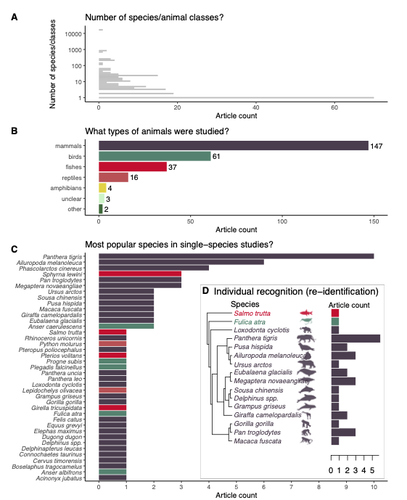

In their paper, Nakagawa et al. (2023) conducted a systematic review of the literature on machine learning for wildlife imagery. Interestingly, the authors used a method unfamiliar to ecologists but well-established in medicine called rapid review, which can be completed more quickly than a fully comprehensive systematic review while remaining representative (Lagisz et al. 2022). Through a rigorous examination of more than 200 articles, the authors identified trends and gaps, and provided suggestions for future work. Listing all their findings would be counterproductive (you’d better read the paper), so I will focus on a few results that I found striking, fully assuming a biased reading of the paper. First, Nakagawa et al. (2023) found that most articles used neural networks to analyze images, in general through collaboration with computer scientists. A challenge here is probably to think of teaching computer vision to the generations of ecologists to come (Cole et al. 2023). Second, the images were predominantly collected from camera traps, with a growing use of aerial images from drones and aircraft, which raise specific challenges. Third, the species concerned were mostly mammals and birds, suggesting that future applications should aim to mitigate this taxonomic bias by including, e.g., invertebrate species. Fourth, most papers were written by authors affiliated with three countries (Australia, China, and the USA) while India and African countries provided lots of images, likely an example of scientific colonialism which should be tackled by, e.g., capacity building and the involvement of local collaborators. Last, few studies shared their code and data, which obviously impedes reproducibility. Hopefully, as journals adopt policies of mandatory code and data sharing, this trend will be reversed.

REFERENCES

Besson M, Alison J, Bjerge K, Gorochowski TE, Høye TT, Jucker T, Mann HMR, Clements CF (2022) Towards the fully automated monitoring of ecological communities. Ecology Letters, 25, 2753–2775. https://doi.org/10.1111/ele.14123

Borowiec ML, Dikow RB, Frandsen PB, McKeeken A, Valentini G, White AE (2022) Deep learning as a tool for ecology and evolution. Methods in Ecology and Evolution, 13, 1640–1660. https://doi.org/10.1111/2041-210X.13901

Christin S, Hervet É, Lecomte N (2019) Applications for deep learning in ecology. Methods in Ecology and Evolution, 10, 1632–1644. https://doi.org/10.1111/2041-210X.13256

Cole E, Stathatos S, Lütjens B, Sharma T, Kay J, Parham J, Kellenberger B, Beery S (2023) Teaching Computer Vision for Ecology. https://doi.org/10.48550/arXiv.2301.02211

Goodwin M, Halvorsen KT, Jiao L, Knausgård KM, Martin AH, Moyano M, Oomen RA, Rasmussen JH, Sørdalen TK, Thorbjørnsen SH (2022) Unlocking the potential of deep learning for marine ecology: overview, applications, and outlook. ICES Journal of Marine Science, 79, 319–336. https://doi.org/10.1093/icesjms/fsab255

Lagisz M, Vasilakopoulou K, Bridge C, Santamouris M, Nakagawa S (2022) Rapid systematic reviews for synthesizing research on built environment. Environmental Development, 43, 100730. https://doi.org/10.1016/j.envdev.2022.100730

Lamba A, Cassey P, Segaran RR, Koh LP (2019) Deep learning for environmental conservation. Current Biology, 29, R977–R982. https://doi.org/10.1016/j.cub.2019.08.016

Nakagawa S, Lagisz M, Francis R, Tam J, Li X, Elphinstone A, Jordan N, O’Brien J, Pitcher B, Sluys MV, Sowmya A, Kingsford R (2023) Rapid literature mapping on the recent use of machine learning for wildlife imagery. EcoEvoRxiv, ver. 4 peer-reviewed and recommended by Peer Community in Ecology. https://doi.org/10.32942/X2H59D

Nazir S, Kaleem M (2021) Advances in image acquisition and processing technologies transforming animal ecological studies. Ecological Informatics, 61, 101212. https://doi.org/10.1016/j.ecoinf.2021.101212

Perry GLW, Seidl R, Bellvé AM, Rammer W (2022) An Outlook for Deep Learning in Ecosystem Science. Ecosystems, 25, 1700–1718. https://doi.org/10.1007/s10021-022-00789-y

Pichler M, Hartig F (2023) Machine learning and deep learning—A review for ecologists. Methods in Ecology and Evolution, n/a. https://doi.org/10.1111/2041-210X.14061

Tuia D, Kellenberger B, Beery S, Costelloe BR, Zuffi S, Risse B, Mathis A, Mathis MW, van Langevelde F, Burghardt T, Kays R, Klinck H, Wikelski M, Couzin ID, van Horn G, Crofoot MC, Stewart CV, Berger-Wolf T (2022) Perspectives in machine learning for wildlife conservation. Nature Communications, 13, 792. https://doi.org/10.1038/s41467-022-27980-y

Wäldchen J, Mäder P (2018) Machine learning for image-based species identification. Methods in Ecology and Evolution, 9, 2216–2225. https://doi.org/10.1111/2041-210X.13075

The revised version of this manuscript addresses all points I made on the previous draft.

https://doi.org/10.24072/pci.ecology.100513.rev21

DOI or URL of the preprint: https://doi.org/10.32942/X2H59D

Version of the preprint: 3

Please see the attached reply letter

Dear Dr Nakagawa,

We have now received 2 reports on your preprint written by experts in the field.

I would like to apologise for the tone used by Dr Falk Huettmann, which I find aggressive and inappropriate (I might read it wrong, English is not my first language).

Now trying to read between the lines of his report, I guess there is a convergence with the second major issue raised by the other referee, in that the study should be better motivated, the results explored in the light of the existing reviews on ML/AI, and the main messages explicitly pitched.

The first major issue raised by the anonymous referee shouldn't be too difficult to address.

I realise that revising your manuscript will require extensive work, but I recommend a revision instead of a rejection because I'd like to read another version of your work.

Cheers,

Olivier Gimenez

I had the pleasure to read the manuscript by Nakagawa et al., which proposes an overview of the use of machine learning algorithms for wildlife imagery. The manuscript is based on an automatic literature survey and intends to answer different questions about the trends in this field (ML for wildlife images). The paper is interesting and the different steps are well described. Still, the quality of the English writing could be greatly improved (the English is correct but could be more fluid).

However, I have a number of concerns, actually two major issues that I would like to share with the authors, hoping this could help improve the manuscript.

First, the authors explain that this is a "rapid" review ("rapid" is actually used in the title as well). Whereas I understand that it is important to be fast when studying a very active field, I am not really convinced that "rapid" does not imply an "incomplete" study. Could the authors tell us what a "not rapid" review would have been, and explain how much longer it would have taken to perform? In the manuscript, I was alerted to the quality/exhaustiveness of the results when observing that 1/ many figures show decreasing (and misleading) trends for year 2021 compared to the others; 2/ many species were not checked by the authors when collecting the species involved in re-id studies. 1/ On every figure showing paper counts, there is a decreasing slope for 2021. The reader can consider this slope as a trend, whereas this is only because papers after October 2021 were not used. I don't think it is reasonable to truncate 2021 when the data are now available. 2/ It appears that the authors missed a number of papers with their SCOPUS request, at least for species re-id. The species list for re-identification in Figure 1D does not account for birds (Ferreira et al, MEE 2020), giraffe (Buehler et al Eco Info 2019; Miele et al, MEE 2021), elephant (Körschens et al), manta ray (Moskvya et al) nor fruit flies and octopus (Schnieder et al, IEEE 2019). As a consequence, I am still a bit confused about how exhaustive the presented results are.

Second, I really appreciated the explanations all along the paper about the different questions and the different ways of answering these questions. Meanwhile, I would recommend a better presentation of the take-home messages. The paper remains very descriptive (which is great) but it is hard to get the actual messages raised by the analysis, unless I missed something, which is quite possible. Yes, there are many studies using camera traps, deep learning, with people from countries outside of the study site. But how much do these results show expected or new trends, how much do they inform the reader, and how much do they complement the reading of nice review papers such as Besson et al, Eco Lett 2022, Tuia et al, Nature Comm. 2022, Christin et al, MEE 2019?

I also have a list of minor points:

- Code sharing is now a prerequisite for many journals. I would have been interested in observing the temporal trend in the percentage of papers with shared code, and possibly by journal (I think for instance it is mandatory for MEE). Indeed, the present manuscript could highlight an interesting message about how much code sharing practices are adopted by the community.

- Another missing point is the scientific domain of the authors: how frequent are multi-disciplinary teams, involving which disciplines ?

l.22: missing comma after "count"

l.23: a lack in survey? well, there are nice review papers Besson et al, Eco Lett 2022, Tuia et al, Nature Comm. 2022, Christin et al, MEE 2019

l.29 CONVOLUTIONAL neural networks

l.29-30 unclear

l.53 10 years into one week ? it seems overestimated

l.54-64 paragraph with some redundancy

l.74 The use of "rapid" is pejorative

l.78 'research weaving'? what's the interest of rapid approach then ?

l.88 "analysis code" => "source code to reproduce the analysis"

l.104 typo "it can be" instead of "I can be"

l.105-107 should be moved to introduction.

Alternatively 100-107 could be moved as a whole to the intro

l.116 two commas + why lower case then upper case?

l.118 do we say "thermal imaging" ?

l.123 what is, for the authors, the difference between "recognition" and "classification" ?

Actually the sentence with multiple entry using "/" is not correct

(what would be "individual animal classification" ?)

l.233 "The primary use of machine learning ... followed by individual recognition (19% of studies)" what is this 19% ? I don't think only 19% of studies used machine learning.

l.239 Sentence not clear.

l.309 Is this paper using GBIF relevant for the present bibliographic study? not convinced.

Figure 3: how could the algorithm be "unclear" ?

https://doi.org/10.24072/pci.ecology.100513.rev11

Hello,

thanks for the manuscript (MS) titled 'Rapid literature mapping on the recent use of machine learning for wildlife imagery' by Nakagawa et al.

I found this work not much relevant or informative, hardly needed.

I have a hard time with the title, "mapping"! as I see no map and just a description of a fast and rapid online search. Already a research design is widely absent, no valid hypothesis formed or tested.

Instead of 'rapid' I propose we can have a THOROUGH and DEEP REVIEW of the topic; that would be better. Why here quick and dirty, and how justified ?

While the MS is well written, it has virtually nothing in there that is not known, or relevant for conservation. It's an incomplete book-keeping effort for the year 2022; how is that science, or how does it help wildlife management and sustainability?

The biggest science budgets are in the U.S. (by far), and per capita, in Canada, and later EU. So what's new? Any science effort, including camera trap data work, reflects nothing but that.

When it comes to 'wildlife', why not use biodiversity and endemic species? That would be more insightful.

Then, as a key issue, conservation: How about these days we publish wildlife species to death in books, and with ML/AI and camera traps (tons of data), while the actual species, habitats and wilderness are vastly on the decline. So what is really the progress? Already the CO2 footprint of science, camera traps, imagery, ML/AI and gear is a conservation 'sink' due to the consumption. It's not sustainable whatsoever.

That's the reality picture, but the authors are totally silent (neoliberal) about it. Adding viewpoints from Ecological Economics would help here and is expected. The topic of POVERTY is widely ignored, but a key issue worldwide, and for wildlife. One may add the 8 billion people, climate change etc. Myself, I am always concerned when 'spying' is not discussed in such data, e.g. camera traps, drones, planes.

Lastly, I totally agree on the topic of no data sharing in such applications. That's indeed a great topic of failure but widely known and just a marginal result in the MS; see (missing) camera trap data in Antarctica, Svalbard or in GBIF by mandated member nations, and then, see NGOs often being exempt. And include metadata in this discussion.

Anyways, how does all of this serve mankind, or wildlife, better?

I see nothing relevant or new provided in this MS, just another endless re-chew of things most people know with some R-type graphs (but data are 'rapid' and thus not thorough). May we call this internet research ?

Overall, I find this MS not so informative and poorly thought out. It oversells itself.

Thanks, that's my assessment of this work.

Kind regards

Falk Huettmann

https://doi.org/10.24072/pci.ecology.100513.rev12